Addressing the challenges of publication bias with RCT registration

In social science, as in other disciplines, a pattern of publication bias has emerged: Studies that show positive results are often more likely to get published. This is a problem because policymakers use results from randomized impact evaluations, also known as randomized controlled trials (RCTs), to inform their decisions.

It's therefore possible that a published study found a social policy or program to be effective, but several other unpublished studies testing a similar program may have found it to be ineffective.

This presents risks for program designers and policymakers: They may use the published results of a study to inform implementation or scale-up of a new program, not knowing that other studies testing the same program found opposite results. Millions of dollars of government, aid, and philanthropic funds could be wasted, and the introduction of truly effective policies could be unnecessarily delayed.

Until recently, we knew very little about RCTs in the social sciences if they did not get published. Cue the birth of the American Economic Association's (AEA) registry for randomized controlled trials.

In April 2012, the AEA signed on to develop and host a registry for RCTs in the field of economics and social sciences more broadly. The organization felt it was essential to provide access to the entire body of evaluations being conducted by researchers, including the evaluations that fail in implementation or have null or negative findings.

This counteracts the problem of publication bias because it allows researchers and policymakers to have a better understanding of how many times a particular type of program has been evaluated and who is conducting the evaluation.

We manage the registry on behalf of the AEA here at J-PAL. Our experience illustrates some promising behavioral shifts in the realm of trial registration and research transparency, as well as some challenges that are common across disciplines.

Here are four of our most useful takeaways:

1. Registering a research design is becoming a standard in social science.

The AEA RCT Registry has seen tremendous growth in the number of registered studies, not coincidentally spiking (and staying high) after David McKenzie’s 2013 World Bank Development Impact blog post on trying out the new trial registries. Since the launch of the registry, the practice of registration has become a de facto standard in many branches of the social sciences.

As of today, there are 1,579 trials registered with study locations across 115 countries. There are over 2,400 registered accounts, with around 1,600 active users per month. The chart below shows growth of registration over time.

Some of this increase is driven by incentives and mandates for study registration. Over the years, a number of organizations and funders have begun to require or strongly encourage registration for randomized evaluations.

For instance, the National Bureau of Economic Research (NBER) encourages registration by providing a reminder about the registry when RCT-based studies are submitted for review by their institutional review board, and also when RCT-based working papers are submitted through their site (if they haven’t been registered already). The Innovation Growth Lab requires registration for all studies that they fund. J-PAL and Innovations for Poverty Action (IPA) have also made registration standard operating procedure for all new and ongoing studies since 2013.

A growing number of philanthropic funders, including the Laura and John Arnold Foundation, also require registration.

2. Pre-registrations are increasing.

More studies are being registered before their intervention start date. On the AEA Registry, when a trial gets registered before the start of its intervention, that trial receives an orange clock badge.

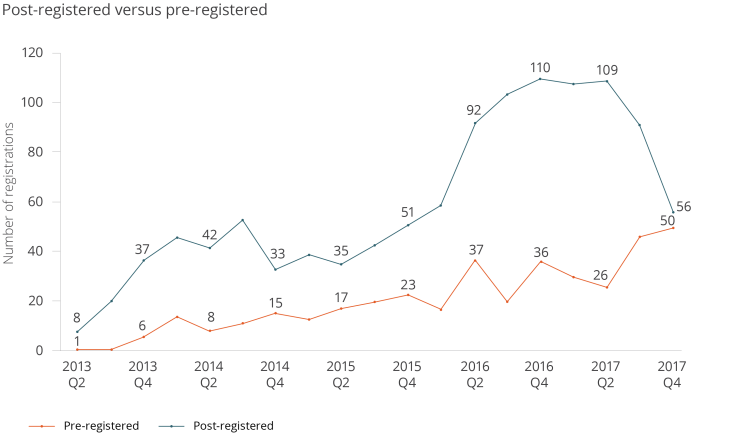

Of the 1,579 currently registered studies, 422 have been pre-registered. In the last three months of 2017, we saw the highest number of pre-registered studies (50) of any quarter since the registry started. The chart below shows the number of new pre-registered and post-registered studies per quarter over time.

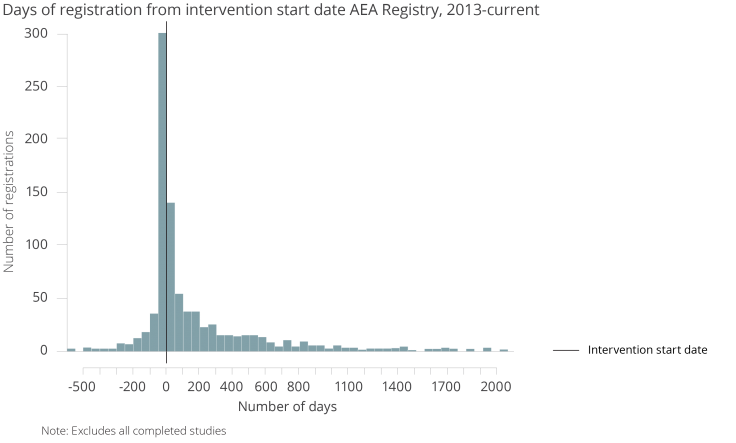
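
For readers who want to reproduce this kind of tally from their own records, here is a minimal sketch in Python. It assumes each record carries a registration date and an intervention start date; the field names `registered_on` and `intervention_start` and the sample rows are hypothetical, not the registry's actual export schema. A study counts as pre-registered when it was registered before its intervention start date.

```python
from collections import Counter
from datetime import date

# Hypothetical records; the field names are assumptions, not the registry's real columns.
records = [
    {"registered_on": date(2017, 10, 2), "intervention_start": date(2017, 11, 15)},
    {"registered_on": date(2017, 11, 20), "intervention_start": date(2017, 6, 1)},
    {"registered_on": date(2018, 1, 10), "intervention_start": date(2018, 2, 1)},
]

def quarter(d):
    """Return a label such as '2017-Q4' for a date."""
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"

def tally_by_quarter(rows):
    """Count pre- and post-registered studies per registration quarter."""
    counts = Counter()
    for row in rows:
        # Pre-registered: registered strictly before the intervention started.
        is_pre = row["registered_on"] < row["intervention_start"]
        status = "pre" if is_pre else "post"
        counts[(quarter(row["registered_on"]), status)] += 1
    return counts

counts = tally_by_quarter(records)
for (q, status), n in sorted(counts.items()):
    print(q, status, n)
```

Grouping by the quarter of registration, rather than the quarter of the intervention, matches the framing of "new" registrations per quarter; other groupings are equally possible.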

As the next chart shows, it is common for registration to occur in the 50-day period prior to the intervention start date.

3. Registered pre-analysis plans are increasing.

Use of pre-analysis plans (PAPs) to map out hypotheses being tested in a study is becoming increasingly common in economics and other social sciences. On the AEA registry, uploading a pre-analysis plan is optional. As J-PAL affiliate Ben Olken (MIT) points out in a 2015 paper, pre-analysis plans have benefits and costs.

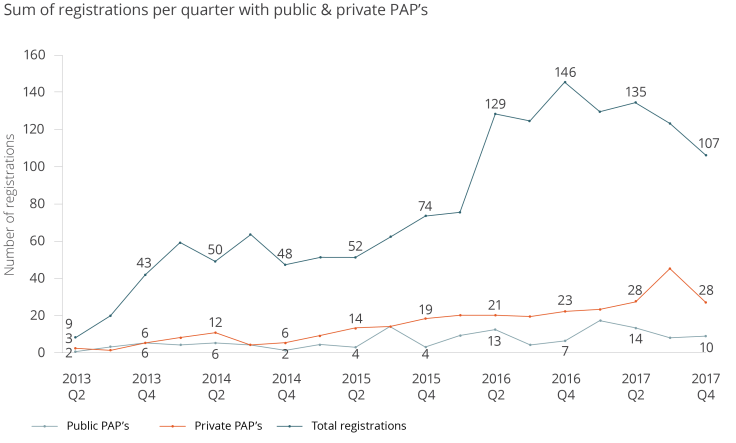

We can measure the growth of pre-analysis plans through analyzing registry data. The data shows us how many researchers have registered pre-analysis plans along with their studies. Researchers have the option of making the pre-analysis plan public (effective immediately), or private (hidden until trial end date).

We find that nearly 30 percent of all registered studies on the AEA registry have a pre-analysis plan: 463 out of the 1,553 total studies (as of January 25).

4. Post-trial reporting is still a challenge.

Obtaining post-trial information at the end of a study is a challenge that all registries face. On ClinicalTrials.gov, where most studies are congressionally mandated to report study results, only 22 percent of the trials subject to that mandate were found to have reported results within one year of the trial completion date. For trials in which results reporting was not congressionally mandated, only 10 percent had reported results within one year of the trial completion date.

On the AEA RCT registry, where results reporting is voluntary, only 21 percent of all studies that have passed their trial end date, regardless of registry status, have reported some type of post-trial information.

We suspect that researchers do not report all post-trial information on the registry. To get a better sense of how many registry entries have published papers, we drew a random sample of forty studies with a trial end date of December 1, 2016 or earlier and searched for them on the researchers’ websites and via Google. Out of the forty studies sampled:

• 10 have published papers or papers that are forthcoming,

• 7 have working papers, and

• 23 have neither.

Out of these forty studies, only six had filled out the post-trial section of the registry in some capacity. Of those six, none reported a paper on the registry, although we found that three of the six did in fact have a paper (i.e., one published paper and two working papers).
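
The arithmetic behind this sample is easy to check directly. The short Python sketch below uses only the counts reported above; the variable names are illustrative.

```python
# Counts taken from the sample of forty studies described above.
published = 10   # published or forthcoming papers
working = 7      # working papers
neither = 23     # no paper found
sample_size = published + working + neither

# Studies for which some paper could be found.
with_paper = published + working
share_with_paper = with_paper / sample_size

# Studies with any post-trial information filled out on the registry.
filled_post_trial = 6
share_filled = filled_post_trial / sample_size

print(f"Sample size: {sample_size}")
print(f"Studies with a paper: {with_paper} ({share_with_paper:.1%})")
print(f"Studies with post-trial info: {filled_post_trial} ({share_filled:.1%})")
```

In other words, 17 of the 40 sampled studies (42.5 percent) had a paper we could find, while only 6 (15 percent) had any post-trial information on the registry.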

To increase the quality of post-trial data on completed studies, we plan to experiment with systems that encourage increased compliance with post-trial reporting.

In the meantime, the registry is only as meaningful as it is comprehensive. We highly encourage all researchers to register their RCTs! Signing up takes two minutes and it’s free.

Not conducting an RCT? There are other social science registries for non-RCT studies, such as 3ie’s RIDIE registry, EGAP’s registry, The Center for Open Science Pre-Registration Challenge, and AsPredicted, to name a few.

AEA RCT Registry data can be downloaded as a CSV file from the advanced search page.

Have feedback to share? E-mail us at [email protected].