Procédures de sécurité des données pour les chercheurs

Summary

Cette ressource propose une introduction aux principes fondamentaux de la sécurité des données, met en contexte les problématiques en matière de sécurité des données qui sont particulièrement pertinentes dans le cadre d’une évaluation aléatoire utilisant des données administratives et/ou d’enquête au niveau individuel, et explique comment décrire les procédures de sécurité des données pour un Institutional Review Board (IRB) ou dans le cadre d’une demande d’utilisation des données. Ce document n’est pas un guide exhaustif ou définitif en matière de sécurité des données, et il ne peut en aucun cas remplacer les conseils d’experts professionnels en sécurité des données, ni se substituer aux exigences en vigueur.

Panorama des questions de sécurité des données

Garantir la sécurité des données est indispensable pour protéger les données confidentielles, respecter la vie privée des sujets de recherche et se conformer aux protocoles et aux exigences en vigueur. Même des données en apparence désidentifiées peuvent être ré-identifiées si elles incluent suffisamment de caractéristiques uniques.1 Or, la divulgation de ces informations peut avoir des conséquences néfastes inattendues. Par exemple, l’informaticien Arvind Narayanan a réussi à ré-identifier un ensemble de données désidentifiées à usage public provenant de Netflix. Sur cette base, il a réussi à déduire les préférences politiques des utilisateurs et d’autres informations potentiellement sensibles (Narayanan et Shmatikov 2008).

De nombreuses universités de recherche proposent une assistance et des conseils en matière de sécurité des données par l’intermédiaire de leur service informatique et du personnel spécialement chargé de l’informatique au sein de leurs départements académiques. Les chercheurs qui souhaitent mettre en place des mesures de sécurité des données sont invités à consulter le personnel du service informatique de leur établissement d’origine, qui pourra leur recommander des logiciels spécifiques et leur apporter un soutien technique.

En plus de consulter des experts en sécurité des données, les chercheurs doivent acquérir une connaissance pratique des questions de sécurité des données afin de garantir l’intégration harmonieuse des mesures de sécurité à leur processus de recherche, ainsi que le respect des protocoles de sécurité des données qui s’appliquent. Les chercheurs doivent également s’assurer que leurs assistants de recherche, leurs étudiants, les partenaires de mise en œuvre et les fournisseurs de données ont une bonne compréhension fondamentale des protocoles de sécurité des données.

Les mesures de sécurité des données doivent être adaptées au risque de préjudice associé à une éventuelle violation des données et doivent intégrer toutes les exigences imposées par le fournisseur de données. Le système de classification du degré de sensibilité des données mis au point par l’université de Harvard et les exigences qui en découlent en matière de sécurité des données illustrent la manière dont ce calibrage peut fonctionner dans la pratique.2

Atteintes à la sécurité des données : causes et conséquences

Une atteinte à la sécurité des données peut avoir de graves conséquences pour les sujets de recherche, l’établissement d’origine du chercheur et le chercheur lui-même. Les sujets de la recherche risquent de voir des informations sensibles et identifiables les concernant divulguées contre leur gré, ce qui les expose à des risques d’usurpation d’identité, d’embarras ainsi qu’à des préjudices financiers, émotionnels ou autres. L’établissement d’origine du chercheur et le chercheur lui-même risquent quant à eux de voir leur réputation entachée et d’avoir plus de difficultés à accéder à des données sensibles par la suite. Il est probable qu’une telle violation entraîne la mise en place d’exigences supplémentaires en matière de conformité, et notamment le signalement de l’atteinte à la sécurité des données à l’Institutional Review Board (IRB) ainsi que, dans certaines circonstances, à chacun des individus dont les données ont été compromises. Le fournisseur de données peut exiger le renforcement des mesures de sécurité, voire révoquer l’accès aux données. Dans certains cas, la responsabilité financière et/ou pénale envers le fournisseur de données et/ou les sujets de recherche est engagée.3

Les données sensibles sont vulnérables à la fois aux risques de divulgation accidentelle et aux attaques ciblées

Si les protocoles de sécurité des données ne sont pas respectés, des données peuvent être divulguées par e-mail, suite à la perte d’un appareil, par le biais d’un logiciel de partage de fichiers comme Google Drive, Box ou Dropbox, ou suite au mauvais effacement des fichiers sur du matériel informatique qui a ensuite été recyclé, donné ou jeté. Tout matériel informatique qui entre en contact avec les données de l’étude doit rester protégé, y compris les ordinateurs portables, les ordinateurs de bureau, les disques durs externes, les clés USB, les téléphones portables et les tablettes. Un vol ou une cyber-attaque peut par ailleurs cibler le jeu de données spécifique d’un chercheur, ou viser plus généralement son établissement d’origine et récupérer par inadvertance l’ensemble de données du chercheur dans le cadre de l’attaque. Les données sensibles doivent être protégées contre toutes ces menaces

Limiter les menaces qui pèsent sur la sécurité des données

Le fait de limiter au maximum les contacts de l’équipe de recherche avec les données sensibles et personnellement identifiables réduit considérablement les dommages potentiels que peut causer une atteinte à la sécurité des données, et limite donc aussi les mesures de sécurité qui doivent être mises en place. Cela permet souvent de simplifier et d’accélérer le flux des données de recherche.

Pour réduire ex ante le niveau de risque en matière de sécurité des données, veillez à ne vous procurer et à ne manipuler que les données sensibles qui sont strictement nécessaires à l’étude de recherche. Les chercheurs peuvent, par exemple, demander au fournisseur de données ou à un tiers de confiance de se charger d’apparier des données individualisées particulièrement sensibles avec le statut de traitement individuel et les mesures des variables de résultats, de façon à ce que les chercheurs eux-mêmes n'aient pas besoin de manipuler ni de stocker les données sensibles.

Désidentifier les données

Séparer le plus tôt possible les informations d’identification personnelle de toutes les autres données

C’est lorsque des informations sensibles ou confidentielles sont directement liées à des individus identifiables que les données présentent le risque le plus élevé. Une fois dissociés, le jeu de données « d’identification » et le jeu de données « d’analyse » doivent être stockés séparément, analysés séparément et transférés séparément.4 De plus, suite à cette séparation, les éléments d’identification doivent rester chiffrés en permanence et les deux jeux de données ne doivent être remis en correspondance que si cela s’avère nécessaire pour ajuster la technique d’appariement des données. Les tableaux 1, 2 et 3 illustrent cette séparation. J-PAL met à disposition des programmes permettant de rechercher les informations d’identification personnelle sous Stata et sous R sur un dépôt GitHub.

| Nom | Numéro de sécurité sociale | Date de naissance | Revenu | État | Diabétique ? |

|---|---|---|---|---|---|

| Jane Doe | 123-45-6789 | 01/05/1950 | $50,000 | Floride | O |

| John Smith | 987-65-4321 | 01/07/1975 | $43,000 | Floride | N |

| Bob Doe | 888-67-1234 | 01/01/1982 | $65,000 | Géorgie | N |

| Adam Jones | 333-22-1111 | 23/08/1987 | $43,000 | Floride | O |

| Nom | Numéro de sécurité sociale | Identifiant de l’étude |

|---|---|---|

| Jane Doe | 123-45-6789 | 1 |

| John Smith | 987-65-4321 | 2 |

| Bob Doe | 888-67-1234 | 3 |

| Adam Jones | 333-22-1111 | 4 |

| Identifiant de l’étude | Revenu | État | Diabétique ? |

|---|---|---|---|

| 1 | $50,000 | Floride | O |

| 2 | $43,000 | Floride | N |

| 3 | $65,000 | Géorgie | N |

| 4 | $43,000 | Floride | O |

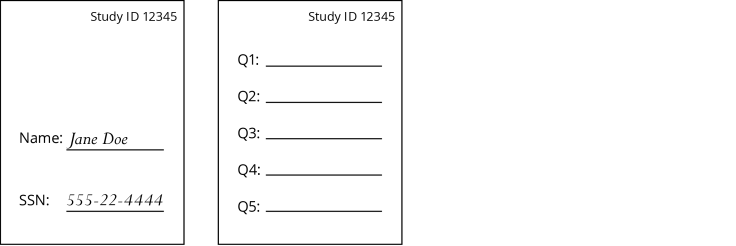

Les enquêtes au format papier doivent également être conçues de façon à pouvoir en retirer les informations d’identification personnelle. Voir la Figure 1 pour une maquette d’enquête. Tous les éléments d’identification directe, comme le nom ou le numéro de sécurité sociale, ainsi que les coordonnées, comme l’adresse ou le numéro de téléphone, doivent figurer sur une page de couverture distincte. Cette page de couverture, ainsi que le formulaire de consentement s’il y a lieu, doivent être détachés du questionnaire principal le plus tôt possible – idéalement dans les 24 heures qui suivent l’entretien. Comme nous l’expliquons plus bas, les participants vont se voir attribuer un identifiant d’étude (qui figure sur les deux sections de l’enquête) pour permettre à l’équipe de recherche d’apparier et de ré-identifier les données si nécessaire. Un tableau de correspondance permettra de faire le lien entre ces deux sections en utilisant l’identifiant de l'étude et devra être stocké dans un emplacement distinct des deux moitiés de l’enquête afin de garantir la confidentialité des données.

Pour conserver la possibilité de ré-identifier l’ensemble de données d’analyse, un « identifiant d’étude » unique peut être créé par le chercheur, le fournisseur de données ou le partenaire de mise en œuvre

Cet identifiant doit être créé par un procédé aléatoire, comme la création d’une liste numérotée après un tri des données sur la base d’un nombre aléatoire, ou par le biais d’un générateur de nombres aléatoires. Cet identifiant ne doit pas être :

- Basé sur n’importe quelle autre caractéristique des données, comme l’ordre numérique ou alphabétique, ni sur un brouillage ou un chiffrement des numéros de sécurité sociale ou de tout autre code d’identification unique.

- Une fonction mathématique arbitraire

- Un hachage cryptographique5

Voici l’une des méthodes possibles pour créer des identifiants d’étude :

- Créez un nombre aléatoire à partir d’une source physique (comme des dés) ou d’un générateur de nombres pseudo-aléatoires (par exemple sous Stata). Les générateurs de nombres aléatoires pouvant changer selon la version du logiciel, veillez à noter ou à spécifier la version de logiciel utilisée (en écrivant par exemple la commande « version 14 » dans le do-file Stata).

- Utilisez le premier nombre aléatoire comme « graine », ou valeur initiale, puis utilisez un générateur de nombres pseudo-aléatoires (sous Stata ou autre) pour trier les observations.

- Utilisez un autre nombre aléatoire comme deuxième « graine », puis un générateur de nombres pseudo-aléatoires pour créer un identifiant d’étude.

- Assurez-vous que chaque identifiant d’étude est bien unique (par exemple, en utilisant la commande -isid- sous Stata).

L’exercice de randomisation de J-PAL et d’IPA sous Stata inclut la création d’identifiants d’étude par ce procédé.

Selon la manière dont l’identifiant d’étude est créé, il peut être indispensable de maintenir une correspondance sécurisée (mise en correspondance/décodage) entre l’identifiant d'étude et les données d’identification personnelle. Ce tableau de correspondance doit être protégé pour deux raisons : pour garantir la confidentialité des données et pour éviter toute perte de données. Innovations for Poverty Action (IPA) propose sur GitHub un programme Stata accessible au public qui automatise l’opération de séparation des informations d’identification personnelle des autres données et la création d’un tableau de correspondance.

Stockage et accès aux données : le chiffrement

Pour sécuriser le stockage et l’accès aux données, plusieurs possibilités s’offrent aux chercheurs. Pour choisir parmi ces différentes options, il faut prendre en considération le degré de sensibilité des données, les exigences réglementaires en vigueur, le savoir-faire technique de l’équipe de recherche, la qualité de la connexion internet et la possibilité d’avoir accès à une expertise et à une assistance informatiques.

- Le chiffrement consiste à convertir les données en un code qui nécessite un mot de passe ou une paire de « clés » pour être décodé. Il est obligatoire pour tous les projets mis en œuvre dans le cadre de J-PAL.6 Les données peuvent être chiffrées à différents niveaux, à différentes étapes de leur cycle de vie, et par le biais de différents logiciels et équipements informatiques.

- Chiffrement au niveau de l’appareil (chiffrement complet du disque). Les ordinateurs, les clés USB, les tablettes, les téléphones portables et tout autre matériel informatique destiné au stockage de données et/ou à la collecte de données primaires peuvent faire l’objet d’un chiffrement complet du disque. Cette méthode protège tous les fichiers présents sur l’appareil et nécessite un mot de passe au démarrage de l’appareil. Pour les tablettes utilisées dans le cadre de la collecte de données primaires, il est par ailleurs possible d’utiliser une application comme AppLock pour empêcher les utilisateurs d’accéder à d’autres applications pendant la collecte de données. Cela permet d’éviter tout transfert de données d’une application à l’autre. L’équipe de recherche doit également activer les fonctions d’effacement à distance sur ces appareils en cas de perte ou de vol.7 Une fois la procédure d’installation et de mise en œuvre effectuée, le chiffrement complet du disque n’a normalement aucune incidence matérielle sur l’expérience utilisateur. Les méthodes et les logiciels disponibles pour le chiffrement complet du disque varient selon le type de matériel informatique concerné. Nous recommandons aux chercheurs de contacter le service informatique de leur établissement pour demander des conseils ou de l’aide.

- Stockage cloud. De nombreux services de stockage cloud, dont Dropbox, Box et Google Drive, ont configuré leurs plateformes de façon à respecter différentes réglementations sectorielles, fédérales et internationales visant à garantir la sécurité des fichiers sur le cloud (même si les données doivent rester chiffrées à tous les points d’extrémité). Certains de ces services proposent aux utilisateurs la possibilité de passer à une version supérieure pour accéder à un niveau ou à un type spécifique de sécurité des données qui soit conforme à des réglementations comme la loi HIPAA (Health Insurance Portability and Accountability Act) ou la loi FERPA (Family Educational Rights and Privacy Act). En l’absence d’un accord formel garantissant que les données seront stockées conformément à un ensemble précis de réglementations, ou d’un chiffrement supplémentaire au niveau des fichiers, le simple stockage des fichiers via ce type de services ne constitue pas une option pleinement sécurisée pour les données sensibles. Avant de conclure un accord avec un fournisseur de services de stockage cloud, il est indispensable de consulter le fournisseur de données initial et l’IRB chargé de l’examen du projet. Pour plus d’informations sur les services de stockage cloud et la sécurité, voir :

- Chiffrement des dossiers Bien que les services de stockage cloud chiffrent la connexion et les fichiers « au repos » sur leur système, ils conservent les clés de chiffrement, ce qui donne techniquement à leurs employés un accès en lecture à tous les fichiers sauvegardés sur leurs serveurs. Pour remédier à ce problème, des outils comme Boxcryptor et VeraCrypt8 permettent de chiffrer les fichiers avant leur stockage dans le cloud. Boxcryptor fonctionne sur la base d’un abonnement payant, tandis que VeraCrypt est gratuit et open source.

- Chiffrement des fichiers. Le chiffrement complet du disque ou de l’appareil permet de chiffrer tous les fichiers présents sur un appareil donné, mais ne protège pas ces fichiers lorsqu’ils quittent l'appareil, par exemple lorsqu’ils sont en transit ou qu’ils sont partagés avec un autre chercheur. Les techniques de chiffrement de fichier s’appliquent à des fichiers spécifiques et facilitent ainsi le partage des données. Pour utiliser ces techniques de façon adéquate, il est nécessaire de mettre en place des protocoles robustes pour le partage des mots de passe ainsi que pour le déverrouillage et le reverrouillage des fichiers avant et après leur utilisation. Pour chiffrer des fichiers, on peut notamment utiliser PGP-Zip[!9] et 7-zip.

- Solutions gérées par le service informatique. Pour les chercheurs qui peuvent faire appel à un service spécialisé en informatique et dont l’équipe a accès à une connexion Internet fiable et rapide, il peut être préférable d’opter pour des solutions directement gérées par le service informatique. Cette option permet aux chercheurs de déléguer à des experts en informatique la gestion de la solution d’accès aux données et de stockage de ces dernières. En outre, les administrateurs informatiques sont parfois en mesure de fournir plusieurs niveaux supplémentaires de protection des données. En revanche, comme dans le cas du stockage cloud, le personnel informatique peut avoir accès à toutes les données présentes sur un serveur donné, y compris les informations d’identification personnelle. Les chercheurs doivent donc veiller à savoir qui a accès aux données et à garder autant que possible un contrôle direct sur celles-ci afin d’éviter tout problème de conformité et toute atteinte accidentelle à la protection des données.

Certains établissements peuvent proposer de l’espace sur un serveur ou mettre à disposition un emplacement pour héberger un serveur. Il peut être préférable de stocker les données sur ce type de serveur plutôt que d’avoir recours au stockage cloud sur des ordinateurs portables ou de bureau. Selon l’établissement, le service informatique peut être en mesure de proposer des options supplémentaires, comme un accès à distance sécurisé pour les utilisateurs hors campus, la sauvegarde automatique et sécurisée des données et le chiffrement.

L’accès à ces serveurs se fait généralement de façon automatique par le biais d’une connexion Internet officielle de l’établissement. L’accès hors site nécessite l’utilisation d’un réseau privé virtuel (VPN). Cette solution peut offrir des garanties de sécurité supplémentaires en chiffrant toutes les connexions réseau et en exigeant au moins deux types d’authentification (par exemple, un mot de passe et un code envoyé par SMS). Il reste toutefois nécessaire de chiffrer les données aux deux points d’extrémité : en d’autres termes, le serveur ou les fichiers hébergés sur le serveur doivent être chiffrés, et toutes les données transférées vers ou depuis le serveur vers un autre serveur ou vers un disque dur doivent être chiffrées à ces endroits.

D’autres options peuvent également être disponibles sur demande, notamment :

- La configuration de délais d’inactivité pour les accès à distance.

- Des mots de passe non récupérables. Si un utilisateur oublie son mot de passe, le mot de passe est réinitialisé par le système, plutôt que de renvoyer le mot de passe d’origine.

- Des paramètres d’expiration du mot de passe qui imposent d’en créer régulièrement un nouveau.

- La limitation du nombre de tentatives de saisie du mot de passe autorisées avant le verrouillage du compte.

- Un journal des accès détaillant qui s’est connecté, depuis quel endroit et à quel moment.

Les responsables informatiques ou les gestionnaires de données sont parfois en mesure d’accorder à certains utilisateurs des autorisations d’accès à certains fichiers ou à certains dossiers spécifiques sur le serveur. Ce niveau de contrôle permet d’accorder l’accès à un dossier donné à l’ensemble de l’équipe tout en restreignant l’accès aux données identifiées à un sous-ensemble spécifique de l’équipe. Consultez les recommandations officielles de votre service informatique en matière de mots de passe et d’autorisations, comme les politiques IS&T pour les projets du MIT.

Transmission et partage des données

Les données doivent être protégées aussi bien lorsqu’elles sont au repos que lorsqu’elles sont en transit entre le fournisseur de données, les membres de l’équipe de recherche et les partenaires. Les données qui sont chiffrées au repos sur un ordinateur portable dont le disque est entièrement chiffré, ou sur un serveur sécurisé, ne sont pas nécessairement protégées lors de leur transfert. Les options présentées ci-dessous n’offrent pas toutes le même niveau de sécurité.

Parmi les modes de transmission non sécurisés, on peut citer :

- Les e-mails non chiffrés

- Le chargement de données non chiffrées sur Dropbox ou sur Box (même si les données sont supprimées très rapidement par la suite). Voir la rubrique consacrée au Stockage cloud dans la section Stockage et accès aux données.

- L’envoi de périphériques non chiffrés par voie postale (par exemple des CD, des clés USB ou des disques durs externes).

- Les fichiers Excel protégés par mot de passe.

Parmi les modes de transmission plus sécurisés, on peut citer :

- Le protocole SFTP (Secure Shell File Transfer Protocol), y compris Secure Shell (SSH) ou Secure Copy (SCP).

- De nombreuses universités proposent la prise en charge de SFTP pour SecureFX.

- Le chargement d’un fichier chiffré sur Dropbox ou Box.

- L’envoi par e-mail d’un fichier chiffré, en partageant le mot de passe séparément et de manière sécurisée.

- L’envoi par courrier de fichiers chiffrés stockés sur des appareils chiffrés.

- Un logiciel d’enquête doté de fonctions de chiffrement, comme SurveyCTO, qui prend en charge le chiffrement pendant la collecte et la transmission des données vers un serveur central.

Communication et partage de données avec les partenaires

De nombreux partenaires de recherche, comme les prestataires de services, les enquêteurs et les détenteurs de données administratives, n’ont qu’une expérience très limitée en matière de protocoles de sécurité ou de partage de données. Une bonne pratique consiste donc à élaborer un protocole de partage et de sécurité des données en collaboration avec ces partenaires en aidant ces derniers à comprendre leur rôle en matière de sécurité des données. Tous les partenaires qui sont amenés à manipuler ou à transmettre des données doivent être informés des politiques de collecte, de stockage et de transfert de données définies dans le cadre de l’étude, et suivre une formation à ce sujet. Demandez aux partenaires de notifier systématiquement l’équipe de recherche avant de partager des données afin de s’assurer du respect du protocole. Dans le cadre de leurs communications en interne et avec leurs partenaires, les équipes doivent utiliser les identifiants de l’étude plutôt que les informations d’identification personnelle. Envisagez d’élaborer des procédures opérationnelles normalisées pour contrôler le respect de la méthode approuvée de partage des données et pour intervenir en cas de manquement, par exemple si les partenaires partagent des données de manière non sécurisée, ou si des données non autorisées sont communiquées aux chercheurs ou aux partenaires. Cela permettra au personnel de réagir rapidement en cas de violation du protocole. Des procédures opérationnelles normalisées doivent inclure les éléments suivants :

- La procédure de partage des données et de réception des données actualisées.

- La procédure permettant de vérifier que l’ensemble de données ne contient pas d’informations non autorisées avant son téléchargement, si possible.

- Le calendrier de vérification des nouvelles données pour détecter les informations non autorisées ou les données d’identification personnelle.

- Un plan pour notifier toute personne à l’origine d’une violation du protocole et demander des mesures correctives afin d’éviter tout nouveau manquement.

- Les modalités de suppression et de destruction des fichiers qui contiennent des informations non autorisées.

Sécurité des appareils personnels

Les chercheurs et leur équipe peuvent prendre plusieurs mesures simples pour s’assurer que leurs appareils restent sécurisés et pour en minimiser les éventuels points faibles. Ces mesures sont les suivantes :

- Utiliser un économiseur d’écran verrouillé par mot de passe et activer la fonction de verrouillage en cas d’inactivité.

- Installer et mettre à jour un logiciel antivirus. Le MIT recommande actuellement Sophos ; il est possible que d’autres établissements soutiennent ou recommandent d’autres solutions. Pensez à faire les mises à jour nécessaires et configurez le logiciel pour qu’il effectue des vérifications régulières.

- Utiliser un pare-feu. La plupart des systèmes d’exploitation (y compris Windows 10, macOS et Linux) ont des pare-feux intégrés.

- Effectuer toutes les mises à jour des logiciels. La plupart des ordinateurs et des plateformes vérifient régulièrement si de nouvelles versions des logiciels sont disponibles. Les nouvelles versions sont souvent créées pour résoudre des failles de sécurité ou d’autres problèmes connus.

- N’installer et n’exécuter aucun programme dont la source n’est pas fiable.

Les services informatiques ont généralement des recommandations de logiciels pour protéger les appareils personnels et pourront éventuellement vous aider à les mettre à jour ou lancer des mises à jour automatiques.

Politique relative aux mots de passe

Pour garantir la sécurité des données, il est essentiel d’utiliser des mots de passe robustes. Il est recommandé d’utiliser un mot de passe différent pour chaque compte important. Par exemple, les mots de passe utilisés pour Dropbox, la messagerie électronique, les serveurs de l’établissement et les fichiers chiffrés doivent tous être différents.

Le National Institute of Standards and Technology (NIST) a publié en 2017 des lignes directrices révisées concernant les mots de passe. Ces directives et leur logique sous-jacente sont expliquées en termes plus accessibles dans un article de blog du personnel du NIST.

En général, un mot de passe robuste :

- Doit comporter au moins huit caractères, mais de préférence beaucoup plus.

- NE DOIT PAS contenir ou être composé uniquement des éléments suivants :

- Des mots du dictionnaire dans n’importe quelle langue, même en alternant les majuscules et les minuscules ou en remplaçant certaines lettres par des chiffres ou des symboles (par exemple, 1 pour l, @ pour a, 0 pour O).

- Le nom du service ou des termes associés.

- Votre nom, votre nom d’utilisateur, votre adresse e-mail, votre numéro de téléphone, etc.

- Des lettres ou des chiffres qui se répètent ou qui se suivent.

N’oubliez pas votre mot de passe

Les mots de passe robustes sont souvent difficiles à mémoriser. Lorsque vous utilisez certains logiciels, comme Boxcryptor, un mot de passe oublié est complètement irrécupérable et entraîne la perte de toutes les données du projet.

Stockez et partagez les mots de passe de manière sécurisée

Un fichier Excel non chiffré protégé par mot de passe ne constitue pas un moyen sécurisé de stocker ou de partager des mots de passe. Les mots de passe ne doivent jamais être partagés par le même moyen que le transfert de fichiers, ni par téléphone.

Les systèmes de stockage de mots de passe comme LastPass sont une solution sécurisée pour créer, gérer et stocker des mots de passe en ligne. Sur cette page web et cette application mobile, il est également possible de partager en toute sécurité des notes et des mots de passe avec des collaborateurs spécifiques. Conserver une copie papier de la liste des mots de passe dans un coffre-fort est une autre option sécurisée.

Éviter la perte de données

En plus de se prémunir contre les menaces extérieures, il est également indispensable de prévenir les pertes de données, autre aspect essentiel de la sécurité des données. Les données et les tableaux de correspondance entre les identifiants de l’étude et les informations d’identification personnelle doivent être sauvegardés régulièrement dans au moins deux endroits distincts, et il est impératif de ne pas oublier les mots de passe.

Les outils de sauvegarde sur cloud comme CrashPlan et Carbonite offrent toute une gamme d’options pour la sauvegarde des données et proposent parfois des solutions supplémentaires pour sauvegarder les données pendant des périodes plus longues afin de se prémunir contre tout effacement accidentel. Les outils de stockage cloud comme Box, Dropbox et Google Drive proposent des forfaits permettant de sauvegarder des données pendant plusieurs mois ou plus, et peuvent offrir une garantie contre la suppression involontaire de données si celle-ci est détectée avant la fin de la période de sauvegarde. Ces outils de stockage ne sont cependant pas de véritables outils de sauvegarde, car ils ne conservent pas les fichiers supprimés indéfiniment. Les serveurs des établissements disposent aussi parfois de dispositifs de sauvegarde des données, et il existe également des systèmes de sauvegarde au niveau des appareils. La sauvegarde des données sur un disque dur externe chiffré (stocké dans un endroit distinct des ordinateurs utilisés au quotidien) constitue une autre solution pour les environnements à faible connectivité.

Effacer des données

L’IRB ou le fournisseur de données peuvent décider si les données doivent être conservées ou détruites, et à quel moment. Les correspondances avec les informations d’identification personnelle doivent être effacées dès qu'elles ne sont plus nécessaires. Pour effacer complètement des données sensibles, il ne suffit pas de déplacer les fichiers dans la corbeille et de la vider. Il existe plusieurs logiciels qui permettent de le faire. Par exemple, le MIT publie des recommandations pour la suppression des données sensibles. Certains services informatiques proposent également une assistance pour la suppression et l’élimination des données de manière sécurisée. Pour les enquêtes sur papier, J-PAL recommande que les copies papier des pages de couverture contenant les informations d’identification personnelle, des questionnaires et des tableaux de correspondance des identifiants de l’étude soient détruites dans les 3 à 5 ans qui suivent la fin du projet (ou selon les engagements pris dans le protocole de l’IRB).10

Le fournisseur de données devra être assuré que tous les fichiers ont été supprimés de manière sécurisée et qu’aucune copie supplémentaire n’a été conservée. Pour documenter l’effacement des données, certains chercheurs font des captures d’écran du processus de suppression

Exemple de description d’un plan de sécurité des données

Les accords d’utilisation des données (DUA) et les IRB demandent souvent aux chercheurs de fournir une description de leurs procédures de sécurité et de destruction des données. De plus, ils ont parfois des exigences spécifiques concernant ces procédures en fonction du degré de sensibilité des données demandées.11 Cette section donne des exemples de descriptions de plans de gestion des données provenant de DUA approuvés. Ces descriptions sont fournies à titre d’information uniquement ; elles ne sont pas nécessairement exhaustives et ne s’appliquent pas à toutes les circonstances. Veuillez consulter le centre de soutien à la recherche, la bibliothèque ou le service informatique de votre université (et/ou de votre département) pour connaître les protocoles détaillés, obtenir si possible des modèles et savoir ce qui est faisable, nécessaire et suffisant dans votre contexte. Vous trouverez d’autres ressources externes sur la description des plans de sécurité des données dans la section « Ressources sur la sécurité des données » en bas de cette page.

Stockage sécurisé des données : Le département met à la disposition de ses étudiants et de ses professeurs un environnement informatique pour la recherche basé sur Unix/Linux. Les systèmes informatiques destinés à la recherche utilisent du matériel de niveau professionnel et sont gérés par une équipe dédiée de spécialistes en informatique. Le département exploite également des ressources supplémentaires mises à disposition par l’établissement de manière centralisée, telles que l’infrastructure réseau et des services professionnels en co-hébergement dans les datacenters de l’établissement. Le personnel informatique du département prend entièrement en charge les serveurs de recherche privés achetés par les professeurs. Cette prise en charge inclut la gestion des comptes, l’application de correctifs de sécurité, l’installation des logiciels et la surveillance de l’hôte. Des serveurs sécurisés seront utilisés pour le traitement et l’analyse des données. Tous les calculs et travaux d’analyse seront effectués exclusivement sur ces serveurs. Des autorisations seront définies au niveau des fichiers afin de restreindre l’accès aux données à l’équipe de recherche.

Toutes les données du projet seront stockées sur un périphérique de stockage en réseau (NAS). Un volume dédié sera créé sur ce périphérique NAS pour le stockage exclusif de toutes les données liées à ce projet de recherche. L’accès aux données de ce volume se fera via le protocole NFSv4 et sera limité aux hôtes et aux utilisateurs autorisés grâce à des listes d’hôtes basées sur les adresses IP et des identifiants de l’établissement. Les données de ce volume ne seront accessibles qu’aux utilisateurs authentifiés sur les serveurs du projet décrits ci-dessous. Les données seront sauvegardées sur un périphérique NAS secondaire auquel seul le personnel informatique aura accès.

L’ensemble du trafic réseau est chiffré à l’aide du protocole SSH2. Pour les connexions hors campus, un VPN offre un niveau supplémentaire de chiffrement et de restriction d’accès. Toutes les connexions au serveur nécessitent deux formes d’authentification, un mot de passe et une paire de clés SSH. Le protocole SSH Inactivity Timeout est utilisé pour définir le délai d’expiration des sessions

Dernière modification : mai 2023.

Ces ressources sont le fruit d’un travail collaboratif. Si vous constatez un dysfonctionnement, ou si vous souhaitez suggérer l’ajout de nouveaux contenus, veuillez remplir ce formulaire.

Ce document faisait initialement partie de la ressource « Utiliser des données administratives dans le cadre d’une évaluation aléatoire », publiée en 2015 par J-PAL Amérique du Nord. Nous tenons à remercier les contributeurs originaux de ce travail, ainsi que Patrick McNeal et James Turitto pour leurs commentaires et leurs conseils éclairés. Ce document a été relu et corrigé par Chloe Lesieur, et traduit de l’anglais par Marion Beaujard. Laurie Messenger a effectué la mise en forme du guide, des tableaux et des figures. Le présent travail a pu être réalisé grâce au soutien de la Fondation Alfred P. Sloan et de la Fondation Laura and John Arnold. Toute erreur est de notre fait.

Merci d’envoyer vos commentaires, questions ou réactions à l’adresse suivante : [email protected].

Avertissement :

Ce document est fourni à titre d’information uniquement. Toute information de nature juridique contenue dans ce document a pour seul objectif de donner un aperçu général de la question et non de fournir des conseils juridiques spécifiques. L’utilisation de ces informations n’instaure en aucun cas une relation avocat-client entre vous et le MIT. Les informations contenues dans ce document ne sauraient se substituer aux conseils juridiques compétents prodigués par un avocat professionnel agréé en fonction de votre situation.

Pour une analyse, voir « The Re-Identification Of Anonymous People With Big Data ». Dans « Estimating the success of re-identifications in incomplete datasets using generative models », Rocher et al. (2019) démontrent qu’il est possible de réidentifier les individus même dans des jeux de données anonymisées avec un échantillon très grand.

La politique de sécurité des données de recherche de Harvard peut être consultée dans son intégralité ici.

Par exemple, Bonnie Yankaskas, professeure de radiologie à l’université de Caroline du Nord à Chapel Hill, a subi des répercussions juridiques et professionnelles après la découverte d’une faille de sécurité dans une étude médicale qu’elle dirigeait, et ce alors qu’elle n’avait pas connaissance de la faille et qu’aucun dommage aux participants n’a été identifié. Voir l’article du Chronicle of Higher Education article pour plus de détails, ainsi qu’un communiqué de presse conjoint de l’université et de professeure Yankaskas qui décrit l’issue de l’incident.

La séparation et le chiffrement des éléments d’identification sont l’une des exigences minimales énoncées dans la check-list des protocoles de recherche de J-PAL.

Pour donner un exemple, des données sur les courses de taxis à New York ont été publiées, dans lesquelles les numéros de permis de conduire et de licence avaient été rendus illisibles grâce à un procédé standard de hachage cryptographique. Ces données ont été désanonymisées en l’espace de deux heures. Pour plus d’informations sur ce cas, voir Goadin 2014 et Panduragan 2014. Un hachage cryptographique associé à une clé secrète peut constituer une option plus sûre, mais le mieux reste d’utiliser un nombre entièrement aléatoire, sans aucun lien avec les éléments d’identification.

Reportez-vous aux instructions de Google pour savoir comment localiser, verrouiller ou effacer un appareil Android égaré. Les fabricants d’appareils individuels (comme Samsung) ont parfois leur propre procédure, que vous pouvez activer séparément. En règle générale, certaines démarches sont nécessaires lors de la configuration initiale des tablettes de l’étude, comme la création d’un compte pour chaque appareil. Ces démarches doivent être approuvées par l’IRB.

C’est ce que recommande Innovations for Poverty Action (depuis novembre 2015) pour le chiffrement des fichiers, conformément aux bonnes pratiques d’IPA en matière de gestion des données et du code.

J-PAL recommande VeraCrypt et a mis à jour la commande Truecrypt sous Stata pour qu’elle fonctionne avec VeraCrypt. J-PAL a également développé un guide d’installation et d’utilisation du logiciel VeraCrypt.

Le MIT recommande actuellement PGP-Zip pour le chiffrement au niveau des fichiers (depuis mai 2018).

En plus de ces éléments, les DUA peuvent imposer aux chercheurs de fournir un protocole expérimental, et contiennent généralement des dispositions relatives à la sécurité des données, à la confidentialité, à l’examen par un IRB ou un comité de protection de la vie privée, à la rediffusion des données et à la répartition des responsabilités entre le fournisseur de données et l’établissement d'origine du chercheur.

Additional Resources

La politique de sécurité des données de recherche de l’université de Harvard classe les données selon cinq niveaux de sensibilité et définit les exigences en matière de sécurité des données correspondant à chacun de ces niveaux.

Guidance Regarding Methods for De-identification of Protected Health Information in Accordance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule, Ministère américain de la santé et des services sociaux.

« How Can Covered Entities Use and Disclose Protected Health Information for Research and Comply with the Privacy Rule? », National Institutes of Health

45 CFR 164.514 – Décrit la norme HIPAA pour la désidentification des données de santé protégées (texte officiel).

Ressources de J-PAL sur le travail avec des données, incluant des ressources supplémentaires sur VeraCrypt.

Pour les chercheurs du MIT, le Dr Micah Altman, directeur de la recherche des bibliothèques du MIT, donne régulièrement des conférences sur la gestion des données confidentielles.

- Le Département des systèmes d’information et des technologies du MIT propose des ressources sur les thèmes suivants :

- Sécurité informatique

- Secure Shell File Transfer Protocol: SecureFX

- Chiffrement (y compris des recommandations de logiciels) et chiffrement complet du disque

- Supprimer les données sensibles

- Le Département des systèmes d’information et des technologies du MIT propose des ressources sur les thèmes suivants :

La politique de sécurité des données de recherche (HRDSP) de l’université de Harvard est une excellente ressource pour la classification des différents niveaux de sécurité. Elle donne également des exemples d’exigences en matière de sécurité.

Research Protocol Checklist, J-PAL

Best Practices for Data and Code Management, IPA

- J-PAL et IPA fournissent des exemples de code pour :

- Rechercher des informations d’identification personnelle avec Stata et avec R

- Séparer les informations d’identification personnelle des autres données avec Stata

- Utiliser VeraCrypt sous Stata

- J-PAL et IPA fournissent des exemples de code pour :

L’article du National Institute of Standards and Technology (NIST) sur la désidentification des données personnelles, également expliqué dans sa présentation sur la désidentification des données.

Les directives révisées du National Institute of Standards and Technology (NIST) concernant les mots de passe, expliquées dans des termes plus simples dans un article de blog de l’équipe du NIST.

Plusieurs établissements proposent des conseils pour l’élaboration d’un plan de sécurité des données et la description de ce plan dans le cadre d’une demande de subvention ou d’un accord d'utilisation des données. Parmi ces ressources, citons notamment :

- Le cadre pour la création d’un plan de gestion des données et les lignes directrices pour des plans de gestion des données efficaces de l’Inter-university Consortium for Political and Social Research (ICPSR).

- Le guide des bibliothèques du MIT sur la rédaction d’un plan de gestion des données.

- Les exemples de plans de gestion des données des bibliothèques de la NC State University.

- La ressource de la Rice Research Data Team sur l’élaboration d’un plan de gestion des données et les outils pour les plans de gestion des données.

- Les outils et ressources du Carolina Population Center de l’UNC sur les plans de sécurité pour les données à usage restreint.

- Le Data Management Plan Tool (DMPTool) du Curation Center de l’Université de Californie permet aux utilisateurs de structurer leur plan de gestion des données à partir de modèles, notamment pour respecter les exigences des organismes de financement. Cette ressource est accessible sur abonnement. Nous vous invitons à consulter la liste des participants au DMP pour savoir si votre université ou votre institution a déjà ouvert une session de connexion institutionnelle.

References

Berlee, Anna. 2015. “Using NYC Taxi Data to identify Muslim taxi drivers.” The Interdisciplinary Internet Institute (blog). Consulté le 30 novembre 2015.

Goodin, Dan. 2014. “Poorly anonymized logs reveal NYC cab drivers’ detailed whereabouts.” Ars Technica.

Consulté le 30 novembre 2015. http://arstechnica.com/tech-policy/2014/06/poorly-anonymized-logs-reveal-nyc-cab-drivers-detailed-whereabouts/.

Mangan, Katherine. 2010. “Chapel Hill Researcher Fights Demotion after Security Breach.” Chronicle of Higher Education. Consulté le 7 mars 2018. https://www.chronicle.com/article/chapel-hill-researcher-fights/124821/.

Narayanan, Arvind, and Vitaly Shmatikov. 2008. “Robust De-Anonymization of Large Sparse Datasets.” presented at the Proceedings of 29th IEEE Symposium on Security and Privacy, Oakland, CA, May. http://www.cs.utexas.edu/~shmat/shmat_oak08netflix.pdf/.

Pandurangan, Vijay. 2014. “On Taxis and Rainbows: Lessons from NYC’s improperly anonymized taxi logs.” Medium. Consulté le 30 novembre 2015. https://medium.com/@vijayp/of-taxis-and-rainbows-f6bc289679a1/.