Navigating hospital Institutional Review Boards (IRBs) when conducting US health care delivery research

Summary

This resource provides guidance to avoid challenges when partnering with a hospital to implement a randomized evaluation and working with the hospital's institutional review board (IRB), which may be more familiar with medical research than social science evaluations. Conducting randomized evaluations in these settings may involve logistical considerations that researchers should be aware of before embarking on their experiment. Topics include how to write a protocol to avoid confusion, objections, and delay by taking pains to thoroughly explain a study design and demonstrate thoughtful approaches to randomization, risks and benefits, and how research impacts important hospital constituencies like patients, providers, and vulnerable groups. The resource also addresses how to structure IRB review when work involves multiple institutions or when research intersects with quality improvement (QI) in order to reduce concerns about which institution has responsibility for a study and to minimize administrative burden over the length of a study. For a more general introduction to human subjects research regulations and IRBs, including responding to common concerns of IRBs not addressed here, please first consult the resource on institutional review board proposals.

The challenge

Randomized evaluations, also known as randomized controlled trials (RCTs), are increasingly being used to study important questions in health care delivery, although the methodology is still not as commonly used in the social sciences as it is in studies of drugs and other medical interventions (Finkelstein 2020; Finkelstein and Taubman 2015). Economists and other social scientists may seek to partner with hospitals to conduct randomized evaluations of health care delivery interventions. However, compared to the IRBs at their home institutions, some hospital IRBs may seem to have a lengthier process for reviewing social science RCTs. While all IRBs follow the guidelines set forth in the Common Rule, differences in norms and expectations between disciplines may complicate reviews and require clear communication strategies.

Lack of familiarity with social science RCTs

Hospital IRBs are typically more accustomed to reviewing clinical trials, research involving drugs or devices, or more intensive behavioral interventions. They may therefore review social science RCTs as if they were comparatively higher-risk clinical trials, while researchers may feel that social science RCTs are generally less risky. Thus, a protocol that mentions randomization may prompt safety concerns and lead to review processes required for trials that demand more scrutiny and risk mitigation.

Randomization

Randomization invites scrutiny on decisions about who receives an intervention, as well as scrutiny of the intervention itself. This can cause a disconnect for the researcher when trying to justify the research, or trigger assumptions that reviewers may have regarding ethics. Is the researcher denying someone something they should be able to receive? Unlike a drug or device that requires testing, it may not be clear to a hospital IRB why an intervention, for instance, to increase social support or to provide feedback on prescribing behavior to physicians cannot be applied to everyone.

Researchers being external to the reviewing IRB organization

Although not a challenge inherent to the field of health care delivery, partnering with a hospital to conduct research may involve researchers interacting with a new IRB. This presents challenges when IRBs are unaware of, or unable to independently assess, the expertise of researchers; do not understand the division of labor between investigators at the hospital and elsewhere; or are not clear on where hospital responsibility for a project starts and ends. It may also pose logistical challenges if it limits who has access to IRB software systems.

Despite these challenges, researchers affiliated with J-PAL North America have successfully partnered with hospitals to conduct randomized evaluations.

- In Clinical Decision Support for High-cost Imaging: A Randomized Clinical Trial, J-PAL affiliated researchers partnered with Aurora Health Care, a large health care provider in Wisconsin and Illinois, to conduct a large-scale randomized evaluation of the effect of a clinical decision support (CDS) system on the ordering of high-cost diagnostic imaging by health care providers. Providers were randomized to receive CDS in the form of an alert that would appear within the order entry system when they ordered scans that met certain criteria. Aurora Health Care’s IRB reviewed the study and approved a waiver of informed consent for patients, the details of which are well documented in the published paper.

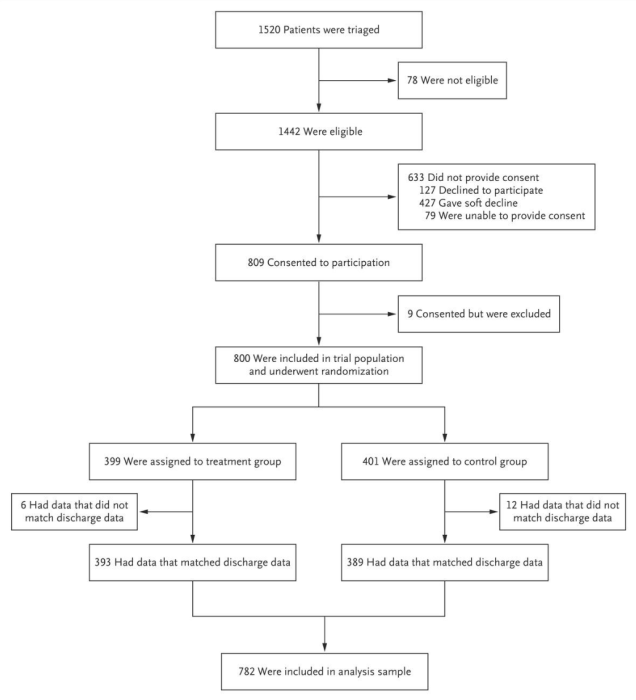

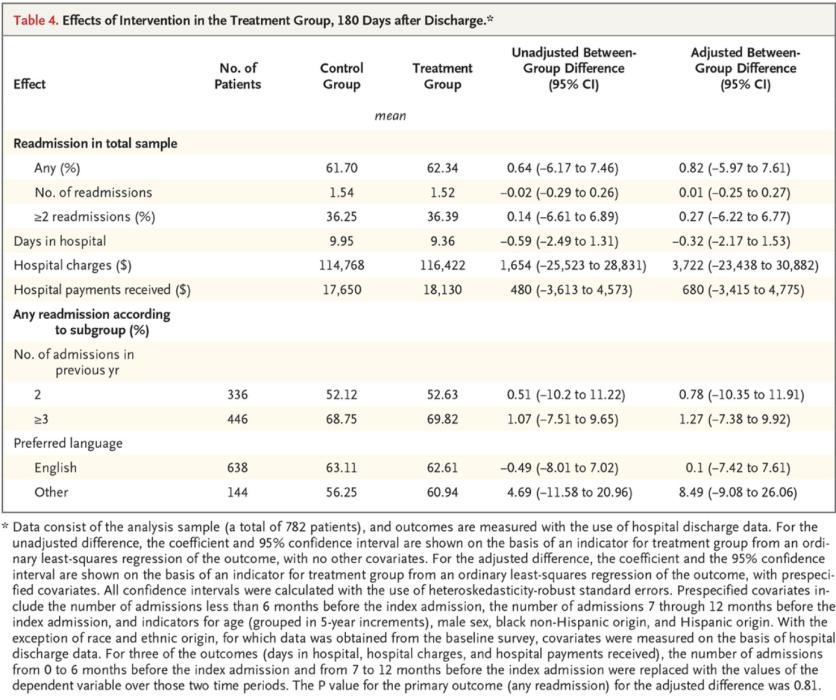

- In Health Care Hotspotting, J-PAL affiliated researchers partnered with the Camden Coalition of Healthcare Providers in New Jersey and several Camden area hospitals (each with their own IRB) to evaluate the impact of a care transition program that provided assistance to high-cost, high-need patients with frequent hospitalizations and complex social needs, known as “super-utilizers.” The study recruited and randomized patients while in the hospital, although the bulk of the intervention was delivered outside of the hospital after discharge. Each recruiting hospital IRB reviewed the study.

- In Prescribing Food as Medicine among Individuals Experiencing Diabetes and Food Insecurity, J-PAL affiliated researchers partnered with the Geisinger Health System in Pennsylvania to evaluate the impact of the Fresh Food Farmacy (FFF) “food-as-medicine” program on clinical outcomes and health care utilization for patients with diabetes and food insecurity. A reliance agreement was established between MIT and the Geisinger Clinic, with the Geisinger Clinic’s IRB serving as the reviewing IRB.

- In Encouraging Abstinence Behavior in a Drug Epidemic: Does Age Matter?, J-PAL affiliated researchers partnered with DynamiCare Health and Advocate Aurora Health to evaluate whether an app-based abstinence incentive program is effective for older adults with opioid use disorders. Advocate Aurora Health reviewed the study, and a reliance agreement was not needed, as it was determined that the co-PIs at the University of California, Santa Cruz and the University of Chicago were not involved in human subjects research.

The following considerations are important to ensure positive relationships, faster reviews, and increased likelihood of approval when working with hospital IRBs:

Considerations for the institutional arrangement

Partner with a PI based at the IRB's institution

RCTs generally involve partnerships between investigators and implementing partners. Hospitals differ from typical implementing partners in that they are research institutions with their own IRBs. Consequently, hospital partnerships often require partnership with a researcher based at that hospital. Therefore, if you want to work with a hospital to conduct your research, plan to partner with a PI based at that hospital. Beyond the substantive knowledge and expertise that a hospital researcher brings, there may be practical benefits for the IRB process. This includes both institutional knowledge and the mundane task of clicking submit on an internal IRB website. Procedures for approving external researchers vary from hospital to hospital and having an internal champion in this process can be invaluable.

Building a relationship with a local PI as your co-investigator can be beneficial for a number of reasons: utilizing content expertise during the research design, addressing the alignment of the research goals with the mission, interest, and needs of the hospital, and also in navigating IRB logistics. A local PI may already have knowledge of nuances to consider when designing a protocol and submitting for IRB review.

- In the evaluation of clinical decision support, J-PAL and MIT-based researchers partnered with Aurora-based PI, Dr. Sarah Reimer, who played a key role in getting the study approved. Aurora requires all research activities to obtain Research Administrative Preauthorization (RAP) before submitting the proposal to the IRB for approval. Dr. Reimer ensured the research design made it through the formal steps of both reviews. She also helped with the approval process allowing the external J-PAL investigators to participate in the research, which included a memo sent to Aurora’s IRB explaining their qualifications, experience, and role on the project.

- Challenges in collaboration are always a possibility. In Health Care Hotspotting, researchers initially partnered with Dr. Jeffrey Brenner, the founder of the Camden Coalition and a clinician at the local hospitals. When Dr. Brenner left the Coalition and the research project, the research team needed to find a hospital-based investigator in order to continue managing administrative tasks like submitting IRB amendments.

Decide which IRB should review

Multi-site research studies (including those with one implementation site but researchers spread across institutions, if all researchers are engaged in human subjects research) can generally opt to have a single IRB review the study with the other IRBs relying on the main IRB. In fact, this is required for NIH-funded multi-site trials. Implementation outside of the NIH-required context is more varied.

All else equal, it may be easier to submit and manage an IRB protocol at one’s home university, with partnering hospitals ceding review. However, not all institutions will agree to cede review. This seems to be the case particularly when most activities will take place at another institution (e.g., you are the lead PI based at a university but recruitment is taking place at a hospital and the hospital IRB does not want to cede review). Sometimes hospitals may require their own independent IRB review, which results in the same protocol being reviewed at two (or more) institutions. Understanding each investigator’s role and level of involvement in the research, along with the research activities happening at each site is crucial when thinking about a plan to submit to the IRB. Consult the IRBs and talk to your fellow investigators about how to proceed with submitting to the IRB and the options for single IRB review. For long-term studies, consider how study team turnover and the possibility of researchers changing institutions will affect these decisions.

- In Health Care Hotspotting, all four recruiting hospitals required their own IRB review. MIT, where most researchers were based, ceded review to Cooper University Hospital, the primary hospital system where most recruitment occurred. Cooper has since developed standard operating procedures for working with external researchers and determining who reviews. Other institutions may have similar resources. Maintaining separate approvals proved labor intensive for research and implementing partner staff.

- Reliance agreements can be easier with fewer institutions. In Prescribing Food as Medicine among Individuals Experiencing Diabetes and Food Insecurity, a reliance agreement was established between MIT (where the J-PAL affiliated researcher was based) and the Geisinger Health System. MIT ceded to Geisinger, since recruitment and the intervention took place at Geisinger affiliated clinics.

We recommend single IRB review whenever possible, because a single IRB will drastically reduce the effort required for long term compliance, such as submitting amendments and continuing reviews. While setting up single IRB review initially may not be straightforward, doing so will pay dividends over the length of the project.

If a researcher at a university wants to work with a co-investigator at a hospital and have only one IRB oversee the research, a reliance agreement or institutional authorization agreement (IAA) will be required by the IRBs. Researchers select one IRB as the ‘reviewing IRB’, which provides all IRB oversight responsibilities. The other institutions sign the agreement stating that they agree to act as ‘relying institutions’ and their role is to provide any conflicts of interest, the qualifications of their study team members, and local context if applicable but otherwise cede review.

SMART IRB is an online platform aimed at simplifying reliance agreements, funded through the NIH National Center for Advancing Translational Sciences. NIH now requires a single reviewing IRB for NIH-funded multisite research. Many institutions use SMART IRB; however, some may use their own paper-based systems or a combination of both (e.g., SMART IRB for medical studies that require it, paper forms for social science experiments). An example of a reliance agreement form can be found here. The process of getting forms signed and sent back and forth between institutions can take time. If you are planning to collaborate with a researcher at another institution, plan ahead by checking whether their institution uses SMART IRB, and talk to other researchers about their experiences with obtaining a reliance agreement via traditional forms.

Anticipate roadblocks to reliance agreements to save yourself time in the future and avoid potential compliance issues. Explain your roles and responsibilities in the protocol, check to see if your institution(s) use SMART IRB, discuss with your co-investigator(s) which institution should be the relying institution, and talk to your IRB if you are unsure whether a reliance agreement will be needed.

Understand who is “engaged” in research

It is fairly common for research teams to be spread across multiple universities; however, not all universities may be considered engaged in human subjects research. Considering which institutions must approve the research can cut down on the number of approvals needed, even in cases of single IRB review.

An institution is engaged in research if an investigator at that institution is substantively involved in human subjects research. Broadly, “engagement” involves activities that merit professional recognition (e.g., study design) or varying levels of interaction with human subjects, including direct interaction with subjects (e.g., consent, administering questionnaires) or interaction with identifiable private information (e.g., analysis of data provided by another institution). If you have co-investigators who are not involved in research activities with subjects and do not need access to the data, even if they are collaborators for authorship and publication purposes, reliance may not be necessary (See Engagement of Institutions in Human Subjects Research, Section B 11). To learn more about criteria for engagement, please review guidance from the Department of Health & Human Services. When in doubt, an IRB may issue a determination that an investigator is “not engaged” in human subjects research.

- In Treatment Choice and Outcomes for End Stage Renal Disease, the study team was based at MIT, Harvard, and Stanford. Data access was restricted such that only the MIT-based team could access individual data. The study design, analysis, and paper writing were collaborative efforts, but the IRBs determined that only MIT was engaged in human subjects research.

- One J-PAL affiliated researcher experienced a weeks-long exchange when trying to set up a reliance agreement between their academic institution and their co-PI’s hospital. As co-PIs they designed the research protocol together. The plan was for recruitment and enrollment of patients into the study to take place at the hospital where the co-PI was situated and for our affiliate to lead the data analyses. The affiliate initiated the reliance agreement, but because the study was taking place at the hospital and our affiliate would not actually be involved in any of the direct research activities (e.g., recruitment, enrollment), their IRB questioned whether their level of involvement even qualified as human subjects research and subsequently did not see the need for a reliance agreement. Our affiliate provided their IRB with justification of their level of involvement in the study design and planned data analyses. An academic affiliation was also set up for the J-PAL affiliate at the co-PI’s hospital for data access purposes. Because the study received federal funding, the investigators emphasized the need for compliance with the NIH’s single IRB policy. Ultimately, the reliance agreement was approved.

Determine whether your study is Quality Improvement (QI)

Quality improvement (QI) is focused on evaluating and identifying ways to improve processes or procedures internally at an organization. Because the focus is more local, the goals of QI differ from research, which is defined as “a systematic investigation… to develop or contribute to generalizable knowledge” (45 CFR 46.102(l)). Instead, QI has purposes that are typically clinical or administrative. QI projects are not human subjects research and are not subject to IRB review. However, hospitals typically have an oversight process for QI work, including making a determination that a project is QI and thus not human subjects research. If you are doing QI, it is crucial to follow the local site’s procedures, so make sure to discuss your work with the IRB or, if separate, the office responsible for QI determinations.

Quality improvement efforts are common in health care. In some cases, researchers may be able to distinguish between the QI effort and the work to evaluate it. In such work, the QI would receive a determination of “not human subjects research” and would not be overseen by the IRB, whereas a non-QI evaluation would be considered research and would receive oversight from the IRB. If the evaluation only involved retrospective data analysis, it might be eligible for simplified IRB review processes, such as exemption or expedited review.

Quality improvement projects are often published as academic articles. The intent to publish does not mean your QI project fits the regulatory definition of research (45 CFR 46.102(l)) and should not, on its own, hinder you from pursuing a QI project. If your QI project also has a research purpose, then regulations for the protection of human subjects in research may apply. In this case, you should discuss the project with the IRB and/or the office responsible for QI determinations. The OHRP has an FAQ page on Quality Improvement Activities that can help you get a better understanding of the differences between QI and research.

Understanding QI matters because hospital-based partners may feel that a project does not require IRB review. While a project may be intended to evaluate and identify ways to improve processes or procedures, there may be research elements embedded in the design. If so, then your project must be reviewed by an IRB, per federal regulations. If you are unsure if your project can be classified as QI, human subjects research, or both, talk to your IRB and/or the office that makes QI determinations.

Additionally, there may be funder requirements to be mindful of when it comes to IRB review. For example, J-PAL’s Research Protocols require PIs to obtain IRB approval, exemption, or a non-human subjects research determination.

- The Rapid Randomized Controlled Trial Lab at NYU Langone, run by Dr. Leora Horwitz, does exclusively QI, because the lab prioritizes the speed of projects. By limiting the projects to just QI and certain types of evaluations, the lab is able to complete projects within a timespan of weeks. Researchers have investigated non-clinical improvements to the health system, such as improving post-discharge telephone follow-up.

- Lessons from Langone: QI projects may not need IRB review, but that doesn’t mean ethical considerations should not be taken seriously. Before getting started, the researchers discussed the approach with their IRB, which then determined that their projects qualified as QI. Two hallmarks of the lab’s QI work are that no personal identifiers are collected, since they are generally unnecessary for evaluating an effect, and that oversubscribed interventions are prioritized to avoid denying patients access to a potentially beneficial program (Horwitz et al. 2019).

Quality improvement should not be undertaken to avoid ethical review of research. As the researcher, it is your obligation to be honest and thorough in designing your protocol and assessing the roles human subjects will play and how individuals may be affected.

Do not outsource your ethics! Talk to your IRB about your options for review if you think your protocol involves both QI and research activities. It is always possible to submit a protocol, which may lead to a determination that a project is not human subjects research, or exempt from review.

Consider splitting up your protocol

A research study may involve several components, some of which may qualify for exemption or expedited review while others will not. For example, researchers may be interested in accessing historical data (retrospective chart review, defined below) to inform the design of a prospective evaluation. In this case, the research using historical data could be submitted to the IRB as one protocol, likely qualifying for an exemption, while the prospective evaluation could later be submitted as a separate protocol. Removing some elements of the project from the requirements of ongoing IRB review may be administratively beneficial and doing so allows some of the research to get approved and commence before all details of an evaluation are finalized. Similar strategies may be used when projects involve elements of QI. An IRB may also request that projects be split into separate components.

Considerations for writing the protocol

The following sections contain guidance for how to write the research protocol to avoid confusion and maintain a positive relationship with an IRB, but these points also relate to important design considerations for how to design an ethical and feasible randomized evaluation that fits a particular context. For more guidance on these points from a research design perspective consult the resource on randomization and the related download on real-world challenges to randomization and their solutions.

Use clear language and default to overexplaining

IRB members reviewing your protocol are likely not experts in your field. Explain your project so that someone with a different background can understand what you are proposing. If you have co-investigators who have previously submitted to hospital IRBs, consult with them on developing the language for your protocol. You should also consult implementing partners in order to sufficiently describe the details of your intervention. Because IRBs only know as much as you tell them, it is important to be diligent when explaining your research. By being thorough and anticipating questions in the protocol, you leave less room for confusion and for questions that may lengthen the process of review and approval. Questions from the IRB aren’t necessarily bad or an indication that you won’t receive approval. However, providing ample explanation proactively answers questions that may come up during review, potentially shortening the wait for a determination.

Be mindful of jargon and specific terminology

The IRB may imbue certain words with particular meaning.

- “Preparatory work,” for example, may be interpreted as a pilot or feasibility study and the IRB may instruct you to modify your application and specify that your study is a pilot in the protocol and informed consent. If you are not actually conducting a pilot, this can lead to confusion and delay.

- Similarly, mentioning “algorithms” may suggest an investigation of new algorithms to determine a patient’s treatment, prompting an IRB to review your work like it was designed to test a medical device.

- In the medical world, IRBs often refer to research with historical data as retrospective chart review. Chart review is research requiring only administrative data (materials, such as medical records, collected for non-research purposes) and no contact with subjects. Chart reviews can be retrospective or prospective, although many IRBs will grant exemptions only to retrospective chart reviews. Prospective research raises the question of whether subjects should be consented, and prospective data collection may require more identifying information to extract data. Prospective proposals therefore receive additional scrutiny.

- Studies that work only with data where the “identity of the human subjects cannot readily be ascertained” (including limited data sets subject to a data use agreement, or DUA) can be determined exempt under category 4(ii) (45 CFR 46.104). If chart review involves identified data, it may still qualify for expedited review (category #5) if it poses no more than minimal risk. In this case researchers are trading off a longer IRB process for greater freedom in how they code identifiers.

IRBs may provide glossaries or lists of definitions that are helpful to consult when writing a protocol. NIA also maintains a glossary of clinical research terms.

Carefully consider and explain the randomization approach

Typical justifications for randomization are oversubscription (i.e., resource constraints or scarcity), where demand exceeds supply, or equipoise. Randomization given oversubscription ensures a fair allocation of a limited resource. Equipoise is defined as a “state of genuine uncertainty on the relative value of two approaches being compared.” In this case, randomization for the sake of generating new knowledge is ethically sound.
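As an illustration of how an oversubscription lottery can be made transparent to an IRB, the following is a minimal Python sketch, not a description of any study's actual procedure. The function name `run_lottery`, the applicant IDs, and the seed value are hypothetical. The sketch assumes a fixed number of program slots and a list of eligible applicants, and it uses a recorded seed so that the allocation can be reproduced and audited later.

```python
import random


def run_lottery(applicant_ids, n_slots, seed):
    """Randomly allocate a limited number of slots among eligible applicants.

    Returns a (treatment, control) pair of lists. All names and parameters
    here are illustrative assumptions, not part of any real study protocol.
    """
    if n_slots < 0:
        raise ValueError("n_slots must be non-negative")
    # Sort first so the result depends only on the seed and the set of IDs,
    # not on the order in which applicants happened to be listed.
    pool = sorted(applicant_ids)
    # A local generator seeded with a recorded value makes the draw reproducible.
    rng = random.Random(seed)
    rng.shuffle(pool)
    n_treated = min(n_slots, len(pool))
    return pool[:n_treated], pool[n_treated:]


# Hypothetical example: six eligible applicants, three available slots.
treatment, control = run_lottery(["P01", "P02", "P03", "P04", "P05", "P06"], 3, seed=2024)
```

Documenting the seed and the applicant list in the protocol is one way to show that every eligible person had the same chance of receiving the limited resource.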

Careful explanation of the motivations behind randomization and the approach are crucial in making sure that both the IRB and participants understand they are not being deprived of care that they would normally be entitled to.

- In Health Care Hotspotting, eligible patients were consented and randomized into an intervention group that was enrolled in the Camden Core Model and a control group that received the usual standard of care (a printed discharge plan). Great care was taken to explain to patients that no one would be denied treatment that they were otherwise entitled to as a result of participating or not participating in the study. Recruiters emphasized that participation in the study would not affect their care at the hospital or from their regular doctors. However, the only way to access the Camden program was through the study and randomization. More detail on this intake process is in the resource designing intake and consent processes in health care contexts.

Different design decisions may reduce concerns about withholding potentially valuable treatments. Strategies such as phasing in the intervention, so all units are eventually treated but in a random order; randomizing encouragement to take up the intervention rather than randomizing the intervention itself; and randomizing among the newly eligible when expanding eligibility can all be considered during study design. For more details on these strategies, refer to this resource on randomization.
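The phase-in strategy can be sketched in a few lines of Python. This is a hedged illustration under simple assumptions: units (for example clinics) are placed in a random order and dealt into a fixed number of rollout waves, so every unit is eventually treated. The function name `assign_phase_in_waves`, the clinic labels, and the seed are hypothetical and do not come from any of the studies described here.

```python
import random


def assign_phase_in_waves(unit_ids, n_waves, seed):
    """Assign every unit to a rollout wave (1..n_waves) in random order.

    All units are eventually treated; only the timing is randomized.
    Names and parameters are illustrative assumptions.
    """
    if n_waves < 1:
        raise ValueError("n_waves must be at least 1")
    # Sort so the assignment depends only on the seed and the set of units.
    order = sorted(unit_ids)
    rng = random.Random(seed)  # recorded seed keeps the ordering reproducible
    rng.shuffle(order)
    # Deal units into waves round-robin so wave sizes differ by at most one.
    return {unit: (position % n_waves) + 1 for position, unit in enumerate(order)}


# Hypothetical example: seven clinics rolled out over three waves.
waves = assign_phase_in_waves(
    ["clinic_A", "clinic_B", "clinic_C", "clinic_D", "clinic_E", "clinic_F", "clinic_G"],
    n_waves=3,
    seed=7,
)
```

Units in later waves serve as the comparison group for units in earlier waves until their own rollout begins, which is what allows the protocol to state that no unit is permanently denied the intervention.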

Explain risks and benefits

Behavioral interventions may not pose the risk of physical harm that drug or medical interventions might; however, social scientists still must consider the risks participants face. Using data (whether administrative or collected by researchers) involves the risk of loss of privacy and confidentiality, including the possibility of reidentification if anonymized data is linked back to individuals. The consequences of these risks depend on the data content, but health data is generally considered sensitive. Risks beyond data-related ones do exist and must be considered. Are there physical, psychological, or financial risks that participants may be subjected to?

Do not minimize the appearance of risks. Studies are judged on whether risks are commensurate with the expected benefits. They need not be risk free. Being thoughtful in the consideration of risks is more effective than asserting their absence.

Don’t outsource your ethics; as the researcher, you have an obligation to consider risks and whether they are appropriate for your study. Researchers should ensure that they consider a study to be ethical before they attempt to make the case to someone else like an IRB. Many but not all behavioral studies will be minimal risk. Minimal risk does not mean no risk, but rather a level of risk comparable to what one would face in everyday life (45 CFR 46.102(j)). Such risks, for example, may include taking medication that has the risk of side effects. Minimal risk studies may be eligible for expedited review, as detailed in this guidance set forth by the Office for Human Research Protections (OHRP). If you do not plan to use a medical drug or device intervention in your research, clearly distinguishing your study from medical research may be helpful in ensuring that your research is reviewed commensurate with risks. If you have a benign behavioral intervention* that is no more than minimal risk to participants, use those terms and justify your reasoning (*please see section 8. in Attachment B - Recommendations on Benign Behavioral Intervention for a more detailed definition of what constitutes a benign behavioral intervention). This will put your study in a shared framework of understanding. Check your IRB’s website for a definitions page and contact your IRB if you have any clarifying questions.

- In Encouraging Abstinence Behavior in a Drug Epidemic: Does Age Matter?, the intervention is administered using an app for a mobile device. Through the app, participants will receive varying incentive amounts for drug negative saliva tests. Participants must have a smartphone with a data plan in order to run the application. There is a potential risk of participants incurring extra charges on their phone plan if they exceed their data limit while uploading videos to the app. To address this risk, researchers made sure that the option in the app settings to “Upload only over Wi-Fi” was known to participants so that they can upload their videos without using mobile data and risking extra charges.

There is no requirement that your study must pose no more than minimal risk. Studies that are greater than minimal risk will face full institutional review board review. You must also explain the measures you plan to take to ensure that protections are put into place for the safety and best interests of the participants.

All studies must report adverse or unanticipated events or risks. An example of an adverse event reporting form can be found here (under “Protocol Event Reporting Form”). However, for studies that are greater than minimal risk, your IRB may ask you to propose additional safety monitoring measures. It is possible that hospital IRBs with a history of reviewing proposals for clinical trials are more accustomed to the need for safety monitoring plans and may expect them even from social science trials that do not appear risky.

- In Health Care Hotspotting, where the only risk was a breach of data confidentiality, weekly project meetings involved a review of data management procedures with staff as well as discussion of any adverse events. The hospital IRB submission prompted researchers to detail these plans.

Additionally, depending on the level of risk and funder requirements, you may encounter the need for a Data and Safety Monitoring Board (DSMB) to review your study. A DSMB is a committee of independent members responsible for reviewing study materials and data to ensure the safety of human subjects and validity and integrity of data. National Institutes of Health (NIH) policy requires DSMB review and possible oversight for multi-site clinical trials and Phase III clinical trials funded through the NIH and its Institutes and Centers. The policy states that monitoring must be commensurate with the risks, size, and complexity of the trial. For example, a DSMB may review your study if it involves a vulnerable population or if it has a particularly large sample size. The DSMB will make recommendations to ensure the safety of your subjects or possibly set forth a frequency for reporting on safety monitoring (e.g., submit a safety report every six months). Be sure to check your funder’s requirements and talk to your IRB about safety monitoring options if you think your study may be greater than minimal risk.

Address the inclusion of vulnerable populations

The Common Rule (45 CFR 46 Subparts B, C, and D) requires additional scrutiny of research on vulnerable populations, specifically pregnant people, prisoners, and children. This stems from concern around additional risks to the person and fetus (in the case of pregnancy) and the ability of the individual to voluntarily consent to research (in the case of those with diminished autonomy or comprehension).

The inclusion of vulnerable populations should not deter you from conducting research, whether they are the population of interest, a subgroup, or included incidentally. Although it may seem easier to exclude vulnerable individuals, relevant statuses such as pregnancy may be unknown to the researcher and unnecessary exclusions may limit the generalizability of findings. In addition to generalizability of findings, diversifying your research populations is important to avoid overburdening the same groups of participants. If you are considering the inclusion of vulnerable populations in your research, brainstorm safeguards that can be implemented to ensure vulnerable populations can safely participate in the research if there are additional risks. In some cases (including if funders require), you may need a Data and Safety Monitoring Board (DSMB) to review your research and provide safety recommendations.

- One J-PAL affiliated researcher proposed a study with pregnant women as the population of interest. Although the researcher was working with a vulnerable population, the study itself was a benign behavioral intervention involving text messages and reminders of upcoming appointments. Thorough explanation of the research activities and how the vulnerable population faced no more than minimal risk led to the study being approved without a lengthy review process.

- In the evaluation of clinical decision support (CDS), researchers argued that it was important to understand the impact of CDS on advanced imaging ordering by providers for all patients, including vulnerable populations. Although vulnerable populations were not explicitly targeted for the study, they should not be excluded from this benefit. Furthermore, the researchers noted that identifying vulnerable populations for the purposes of exclusion would result in an unnecessary loss of their privacy.

Consider the role of patients even if they are not the research subjects

In a hospital setting, considerations about patients will be paramount even if patients are not the direct subject of the intervention, the unit of randomization, or the unit of analysis. Researchers should therefore address patient considerations directly in the protocol.

- In the evaluation of clinical decision support (CDS), healthcare providers were randomly assigned to receive the CDS. The provider was the unit of randomization and analysis, and the outcome of interest was the appropriateness of images ordered. The IRB considered potential risks to patients in terms of data use and patient welfare. The researchers proposed and the IRB approved a waiver of informed consent based on the following justification: the CDS could be overridden, ensuring providers maintained professional discretion in which images to order, which contributed to a determination of minimal risk to the patients. Further, obtaining consent from patients would not be practicable, and may have increased risk by requiring the collection of personally identifiable information that was not otherwise required for the study. You can learn more about activities requiring consent and waivers in this research resource.

These considerations boil down to communication of details and proper explanation in the protocol.

Conclusion

While social scientists may be initially hesitant, collaborating with hospitals to conduct randomized evaluations is a great way to increase the number of RCTs conducted in health care delivery research. Understanding levels of risk, writing a thorough protocol, strategizing for effective collaboration, and considering the role of quality improvement (QI) are important when planning for a submission to a hospital IRB.

Acknowledgments: This resource was a collaborative effort that would not have been possible without the help of everyone involved. A huge amount of thanks is owed to several folks. Thank you to the contributors, Laura Feeney, Jesse Gubb, and Sarah Margolis; thank you to Adam Sacarny for taking the time to share your experiences and expertise; and thank you to everyone who reviewed this resource: Catherine Darrow, Laura Feeney, Amy Finkelstein, Jesse Gubb, Sarah Margolis, and Adam Sacarny.

Creation of this resource was supported by the National Institute On Aging of the National Institutes of Health under Award Number P30AG064190. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

- Research Resource on Institutional Review Boards (IRBs)

- Research Resource on Ethical conduct of randomized evaluations

- Research Resource on Define intake and consent process

- The Common Rule - 45 CFR 46

- The Belmont Report

- OHRP guidance on considerations for regulatory issues with cluster randomized controlled trials

- SACHRP Recommendations: Attachment B - Recommendations on Benign Behavioral Intervention

- OHRP guidance on engagement of institutions in human subjects research

- OHRP FAQs on quality improvement activities

Abramowicz, Michel, and Ariane Szafarz. 2020. “Ethics of RCT: Should Economists Care about Equipoise?” In Florent Bédécarrats, Isabelle Guérin, and François Roubaud (eds), Randomized Control Trials in the Field of Development: A Critical Perspective. https://doi.org/10.1093/oso/9780198865360.003.0012.

Finkelstein, Amy. 2020. “A Strategy for Improving U.S. Health Care Delivery — Conducting More Randomized, Controlled Trials.” The New England Journal of Medicine 382 (16): 1485–88. https://doi.org/10.1056/nejmp1915762.

Finkelstein, Amy, and Sarah Taubman. 2015. “Randomize Evaluations to Improve Health Care Delivery.” Science 347 (6223): 720–22. https://doi.org/10.1126/science.aaa2362.

Horwitz, Leora I., Masha Kuznetsova, and Simon Jones. 2019. “Creating a Learning Health System through Rapid-Cycle, Randomized Testing.” The New England Journal of Medicine 381 (12): 1175–79. https://doi.org/10.1056/nejmsb1900856.