Questionnaire piloting

Summary

Piloting is the testing, refining, and re-testing of survey instruments in the field to make them ready for your full survey. It is a vital step to ensure that you understand how your survey works in the field and that you are collecting accurate, appropriate data. It also helps in the process of designing staff training for the final launch. This section focuses on piloting the questionnaire, but field protocols must also be piloted to ensure data collection runs as planned.

See J-PAL's questionnaire piloting checklist for a guide through the process.

Piloting phases and timelines

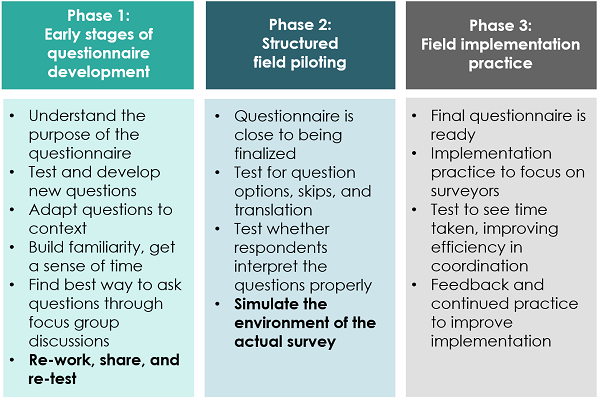

The piloting process begins once you have a first, rough draft of your questionnaire (see also resources on measurement and survey design) and ends when you have a final, translated questionnaire. Piloting is iterative but involves three main phases (J-PAL staff and affiliates: see J-PAL South Asia's presentation on piloting best practices for more information):

- Phase 1: Early questionnaire development

- Phase 2: Structured field piloting

- Phase 3: Full scale field implementation practice ("false launch")

Brand new survey instruments will need to go through all three phases. Adaptations of well-designed questionnaires from a reliable source in the survey country may only need phases 2 and 3. Follow-up surveys with no major changes from the baseline may only need phase 3. Typically, the three-phase piloting process should start 4-6 months before survey launch.

Phase 1: Early questionnaire development

This phase requires heavy involvement of the researchers and RA and is covered in greater detail in the survey design resource. You should end up with a draft paper-based questionnaire in both English and the local language(s).

Phase 2: Structured field piloting

This phase is predominantly carried out by senior field staff, with perhaps 1-2 additional enumerators, and should take place before enumerator training. At this point, you should have a full draft questionnaire and a good sense of the questions that need to be asked. The questionnaire should have been translated so that the version that will be used in the field is ready for pre-testing. The field team should be accompanied by research staff to ensure that any gaps in the instrument are systematically captured and addressed. Pen and paper pilots are recommended at this stage, even if the final survey will be digital, as it is easier to take notes and write suggestions on paper. See more at DIME's survey piloting guide.

Implementation of Phase 2 has three parts:

1. Pre-pilot preparation

- Where and who?

  - Find a population and location similar to what you will use in the study, but do not use your actual study area

  - If the real sample will include different sub-groups (e.g., men and women, more and less educated), include them in the pilot

- When? Allow time for the following:

  - Obtaining approvals for piloting, such as from relevant national/local government authorities or local leaders. Field staff should be able to provide guidance on what local approval is needed.

  - Submitting amendments to the IRB so you have IRB approval for the final version of the questionnaire.

    - The original application can include language to the effect that small changes to the questionnaire may be made (to allow for smaller edits such as rephrasing) but that larger changes will be resubmitted.

  - Editing the questionnaire and building field protocols based on the pilot in advance of staff training. This may take a couple of weeks, depending on the length, complexity, and degree to which the questions have already been piloted (e.g., for the baseline or a different survey).

- How?

  - As with a full survey, you should have a field plan before launching your phase 2 pilot.

  - Train key staff on the questionnaire and create a piloting checklist to provide feedback (see sample tracking and feedback sheets below). In general, it is better if this is on paper to allow for easy writing of notes. By the end, interviewers must be familiar with the instrument and the objectives of the pilot.

  - Allow at least a day between training and the pilot for the field staff to offer post-training feedback on:

    - Questions and answers, including translation, wording, ordering, suitability, completeness, missing options, skip patterns, and any additional clarifications needed (such as whether the respondent can interpret and answer the questions properly)

    - Questionnaire length, formatting, structuring, and overall flow

    - Choice of respondent

    - Clarity and thoroughness of instructions

    - Overall questionnaire feasibility (e.g., length, field realities that don’t fit with assumptions, permissions, availability of respondents, replacement strategies, etc.)

  - Be sure that you have considered all logistics (e.g., stationery, printed questionnaires, transport, necessary permissions, hiring of additional field staff, per diems, etc.). This will be on a smaller scale than with full implementation, but the same considerations apply. See the survey logistics section.

2. During piloting

- Each enumerator should go into the field with a form for recording piloting metadata (the timing of the survey, how long it took, and the type of respondent). This enables enumerators not only to track which surveys they have completed, but also to record who they spoke to and any overall high-level thoughts on the survey. It also serves as a guide to the kinds of questions they should be thinking about and recording systematically. See the metadata tab of J-PAL's sample recording sheet (.xls direct download).

- The format of this recording form should be different from a standard paper survey: it should explicitly ask about all relevant aspects of each question. An example is included in the sample recording sheet (.xls direct download), in the question-by-question tab.

- Ensure field staff read questions as written and do not make their own assumptions.

- Identify respondents who can answer the questions and then provide feedback on the questions and the process, i.e., someone with the time and energy to really engage.

- Conduct the pre-testing in pairs so that one person can engage with the respondent while the other observes and records.

- Take notes, especially whenever:

  - A follow-up question is required

  - A change of wording is required

- Allow time for piloting and iteration between the field team and researchers.

- RAs should observe pilot interviews even if they don’t speak the language, as they will be able to get valuable insights into how the survey is going by observing rapport, body language, pauses, etc.
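The piloting metadata described above (timing, duration, and type of respondent) lends itself to a quick summary once the recording sheets are typed up. The following is a minimal sketch, assuming the sheets have been entered as simple records; the field names, the `PilotInterview` class, and the three example interviews are all hypothetical and made up for illustration, not taken from J-PAL's sample recording sheet.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PilotInterview:
    """One row of a (hypothetical) piloting metadata sheet."""
    enumerator: str
    respondent_type: str
    start: datetime
    end: datetime
    notes: str = ""

    @property
    def minutes(self) -> float:
        # Interview length in minutes, derived from start and end times.
        return (self.end - self.start).total_seconds() / 60


def median_minutes_by_respondent(interviews):
    """Group interview lengths by respondent type and take the median."""
    by_type = defaultdict(list)
    for interview in interviews:
        by_type[interview.respondent_type].append(interview.minutes)
    return {rtype: median(values) for rtype, values in by_type.items()}


# Made-up example records, for illustration only.
interviews = [
    PilotInterview("E01", "mother", datetime(2023, 1, 9, 9, 0), datetime(2023, 1, 9, 9, 30)),
    PilotInterview("E01", "father", datetime(2023, 1, 9, 10, 0), datetime(2023, 1, 9, 10, 40)),
    PilotInterview("E02", "mother", datetime(2023, 1, 9, 9, 15), datetime(2023, 1, 9, 9, 51)),
]

summary = median_minutes_by_respondent(interviews)
print(summary)  # {'mother': 33.0, 'father': 40.0}
```

A summary like this can feed directly into the debrief, for example to flag respondent types for whom the questionnaire runs noticeably long.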

3. Post-piloting feedback

- Conduct a detailed de-brief as soon as possible after the pilot, including reviewing question by question and asking for feedback from everyone involved. Make sure someone is taking detailed notes.

- Revise the questionnaire as soon as possible after feedback. Be careful with version control, and be sure to label each version appropriately (use the date the version was completed rather than appending authors’ initials or similar).

- Systematically document notes from the piloting exercise for further discussion with the PIs. These documents also serve as a good source of information should the team need to revisit why a decision was made to ask a question one way over another. See example notes in table 1 below.

- Start with paper-based questionnaires, but be sure to also pilot the digital data collection method you will be using before moving on to Phase 3. See more on this below.

Table 1. Example notes from a piloting exercise

| Item | Notes from pilot |
| --- | --- |
| Time taken | 30 minutes (same as baseline). Piloted by two supervisors; less experienced surveyors may take longer. |
| C7: Name and address of school | Able to match names with DISE code for half the kids and list possible matches for others. (REFER NEXT TAB) It may be helpful for surveyors to carry school list along with narrowing down possible matches by asking about full address, school mgmt, co-ed/boys/girls |
| C8/D11: Distance of school/tuition from home | Some parents able to reply in km (esp if school is close) but others gave estimates in minutes. But time estimate is "actual time taken" not "hypothetical time taken to walk to school/tuition." We could ask if child walks, cycles, takes rickshaw/bus, etc -- will need to ask this anyway for educational expenditure on transport. |
| C9/C10: Educational subsidies and expenditures | This question takes a long time esp if there are lots of kids in hh. School Fee: Remove Rs _ from C9 since hh is not receiving cash, only amount paid by them (in C10) is lower or zero if child is in govt school/EWS in pvt school. Textbooks/Stationery, Uniform: If child receives cash for both textbooks and uniform, hh can't distinguish between the two and can only report total amount. Also, respondents find it difficult to estimate own annual expenditure on these categories. Other: Do we include expenditure on extra-curricular activities, sports, NCC, etc. if they are organized by school? |
| E2: Rank factors important in tuition choice | Since there are too many factors, respondents can't rank them all. Question could be re-phrased to: -> how important is each of these factors? |

Phase 3: Full scale field implementation practice ("false launch")

You should have a full, final questionnaire programmed into the software that you intend to use for your full survey. The aims of this final phase are to:

- Test field protocols, including functionality of GPS devices and data transfer from device to server

- Train enumerators on the instrument and overall aim of the data collection exercise

  - First, cover the instrument on paper so they focus on understanding the questions, and then move to the digitized version

  - Then have enumerators practice in pairs, run mock sessions, etc.

- Practice implementing the survey (e.g., have surveyors conduct an interview, call the field manager to report an issue, send the data to the server, etc.). Focus on the enumerators and their experience conducting the interview

- Test how long the whole survey process takes

- Practice and improve survey efficiency (e.g., are there quicker ways of asking questions, or advancing through modules?)

- Practice and improve coordination between field staff (e.g., have enumerators call field monitors to ask for clarification on a question, etc.)

- Get feedback on how to improve implementation

See also the World Bank's survey guidelines and guidelines on field protocols to test, such as when respondents are available, infrastructure (e.g., electricity and internet access), sampling protocols and replacement strategy, and collecting geo-data.

Digital vs. paper data collection

Digital data collection

During digital data collection piloting you should additionally:

- Ensure that the digital device is working and check how long the battery of the device lasts

- Train the pre-test staff members on your chosen method of digital data collection

- Ensure the interface is working

- Ensure the software is working and bug free; any malfunctions should be recorded and reported

- Test data validations and constraints

- Test skip patterns

This is typically done through three types of testing:

- Bench testing: In-office testing of the survey, typically involving 3-6 enumerators

- Device testing: Thorough testing of devices, including in extreme scenarios

- Data flow testing: Check the flow of data from exporting out of the device to cleaning and merging.

  - This is generally done in conjunction with bench testing

  - Be sure to examine your dataset in Stata/R (see the data quality checks resource for more)
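As an illustration of what testing constraints and skip patterns on exported data can look like, here is a minimal sketch. It is written in Python rather than Stata or R purely for illustration; the same checks can be expressed in either. The column names (`resp_id`, `age`, `has_children`, `n_children`), the 18-99 age range, and the four example rows are hypothetical assumptions, not part of any real instrument.

```python
import csv
import io

# Hypothetical export from a bench test. Assumed rules:
#   - age must lie between 18 and 99 (constraint)
#   - n_children is asked only when has_children == "yes" (skip pattern)
EXPORT = """resp_id,age,has_children,n_children
101,34,yes,2
102,17,no,
103,45,no,3
104,29,yes,
"""


def check_row(row):
    """Return a list of problems found in one exported record."""
    problems = []
    if not 18 <= int(row["age"]) <= 99:
        problems.append("age out of range")
    answered = row["n_children"] != ""
    if row["has_children"] == "yes" and not answered:
        problems.append("n_children missing")
    if row["has_children"] == "no" and answered:
        problems.append("n_children should be skipped")
    return problems


def check_export(text):
    """Map each respondent ID with at least one problem to its problems."""
    flagged = {}
    for row in csv.DictReader(io.StringIO(text)):
        problems = check_row(row)
        if problems:
            flagged[row["resp_id"]] = problems
    return flagged


flagged = check_export(EXPORT)
for resp_id, problems in flagged.items():
    print(resp_id, problems)
```

Running checks like these on bench-test exports means that a constraint or skip pattern that was mis-programmed shows up as flagged records before the survey reaches the field.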

More information can be found in the survey programming resource. If you are using a survey firm, they should ideally participate in this phase; see more in Working with a third-party survey firm.

Paper data collection

Although most data collection is now done digitally, there may be occasions when you have to use paper surveys. If this is the case, make sure that:

- Instructions to enumerators are clear, since you can’t pre-program skip patterns or logic checks

- You have piloted your final paper questionnaire extensively in the field

- You test your data entry software in advance of the full launch to look out for missing data links, or necessary revisions to data collection templates

- Your data pilot includes a careful analysis of entered data to ensure that skip patterns, etc., are being understood correctly

Last updated June 2023.


We thank James Turitto and Jack Cavanagh for helpful comments. Any errors are our own.

Additional Resources

J-PAL South Asia's Piloting: Best Practices lecture, delivered in their 2019 Measurement & Survey Design Course (J-PAL internal resource)

World Bank DIME Analytics. Survey guidelines.

World Bank DIME Wiki. Piloting survey protocols.

World Bank DIME Wiki. Survey Pilot.