Designing intake and consent processes in health care contexts

Overview

When conducting randomized evaluations of interventions with human subjects, researchers have to consider how to design intake and consent processes for study participants. Researchers must ensure that participants are well informed about a study in order for them to give informed consent. This part of the enrollment process will ultimately determine the study sample composition. Health care interventions present additional challenges compared to other human subject research areas, given the complexities of the patient-health professional relationship, concerns about disruptions to engagement in care, and the vulnerable position patients are potentially in, particularly if enrolled while receiving or seeking care.

This resource details challenges in health care contexts when designing the intake and consent process and highlights how those challenges were addressed in two studies conducted by J-PAL North America: Health Care Hotspotting in the United States and the Impact of a Nurse Home Visiting Program on Maternal and Early Childhood Outcomes in the United States. These case studies show the importance of communicating with implementing partners, deciding how to approach program participants, supporting enrollment, providing training, and creating context-specific solutions. For a general overview of the intake and consent process beyond the health care context, please see the resource on defining intake and consent processes.

Health Care Hotspotting

Description of the study

The Camden Coalition of Healthcare Providers and J-PAL affiliated researchers collaborated on a randomized evaluation of the Camden Core Model, a care management program serving “super-utilizers,” individuals with very high use of the health care system and with complex medical and social needs, who account for a disproportionately large share of health care costs. This program provides intensive, time-limited clinical and social assistance to patients in the months after hospital discharge with the goal of improving health and reducing hospital use among some of the least healthy and most vulnerable adults. Assistance includes coordinating follow-up care, managing medication, connecting patients to social services, and coaching on disease-specific self care. The study was conducted to evaluate the ability of the Camden Core Model program to reduce future hospitalizations amongst super-utilizers compared to usual care.

Patients were enrolled in the study while hospitalized. Prior to enrollment, patients were first identified as potentially eligible by the Camden Coalition’s triage team, which reviewed electronic medical records of admitted patients daily to see if they met inclusion criteria. Camden Coalition recruiters then approached potentially eligible patients at the bedside, confirmed eligibility, administered the informed consent process and a baseline survey, and revealed random assignment. Potential participants were given a paper copy of the consent form, which was available in English and Spanish, and were given time to ask questions after the information had been provided to them. Enrollment ran from June 2, 2014 through September 13, 2017.

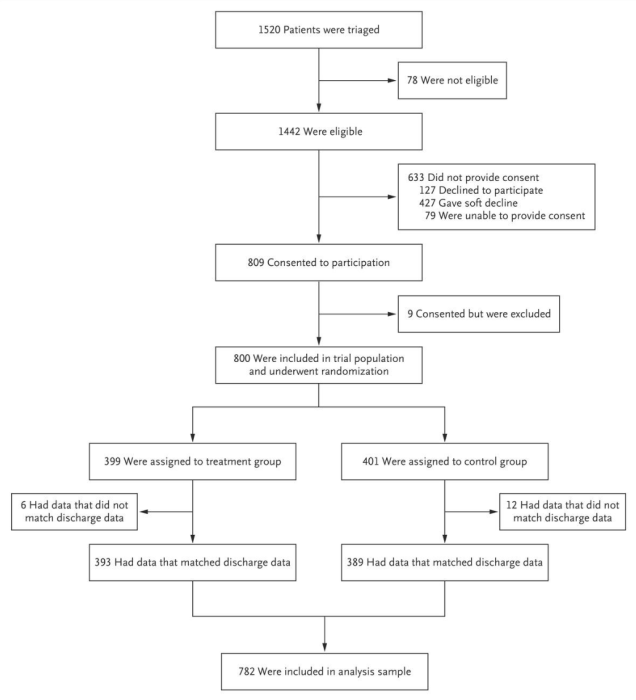

Enrollment at a glance:

- Enrollment period: 3.3 years (June 2, 2014 to September 13, 2017)

- Sample size: 800 hospitalized patients with medically and socially complex conditions, all with at least one additional hospitalization in the preceding 6 months

- Subjects contacted: 1,442 eligible patients were identified, many of whom declined to participate or could not be reached prior to discharge

- Who enrolled: Camden Coalition recruitment staff (distinct from direct providers of health care and social services)

- Where enrolled: Hospital, while subject was admitted

- When enrolled: Enrolled prior to random assignment; randomization revealed immediately after

- Level of risk: Minimal risk

- Enrollment compensation: $20 gift card

Which activities required consent?

Participants provided informed consent for study participation, for researchers to access data, and to receive the intervention if assigned to the intervention. The consent process included informing participants about the randomized study design, the details of the Camden Core Model program and the care they would receive, and how their data would be used. Participants were only able to receive services from the Camden Coalition if they agreed to be part of the study, but they were informed about their rights to seek alternative care regardless of randomization.

The consent process focused on consent to permit the use and disclosure of health care data, known as protected health information (PHI). As explained in the resource on data access under HIPAA, accessing PHI for research often requires individual authorization from patients, and individual authorization can smooth negotiations with data providers even when not required. The process for individual authorization under HIPAA can be combined with informed consent for research. In this case, the study relied on administrative data from several sources: hospital discharge data provided by Camden-area hospitals and the state of New Jersey, Medicaid claims data, social service data provided by the state, and mortality data provided by the federal government. Accidental data disclosure was the study’s primary risk.

The consent process provided details so patients could make informed decisions about data disclosure. Patients were informed that the researchers would collect identifiers (name, address, social security number, and medical ID numbers) and use them to link participants to administrative data. Patients were informed about the types of outcomes considered, the types of data sources, the legal environment and HIPAA protections afforded to participants, and how data would be stored and protected. Plans for using administrative data were not all finalized at the time of enrollment so language was kept broad to allow for linkages to additional datasets. The consent form also made clear that the data existed whether patients participated in the study or not; consent was sought so that researchers could access the existing data to measure the impact of the Camden Core Model.

In addition to informed consent for health data disclosure, researchers also obtained informed consent for participating in the study and receiving the intervention. Participants were informed about the parameters of the study (including the goals of the research, that participation is voluntary and does not affect treatment at the hospital, and the probability of selection for receiving the intervention) as well as details of the program (including the composition of the care team and the goals and activities of the program, such as conducting home visits and scheduling and accompanying patients to medical appointments). Because the intervention was not created by the researchers, was unchanged for the purposes of the study, and came with no additional risks beyond the risk of data disclosure, the bulk of the consent process focused on data. Participants selected into the intervention group filled out an additional consent form in order to receive the Camden Core Model program.

The research team did not pursue a waiver of informed consent, even though the study’s focus on secondary data might have qualified it for one. Waivers are not allowed when it is practicable to seek consent. Because there was already an in-person intake process for Camden Core Model program participants, seeking consent was practicable, and the research team therefore sought it.

Seeking informed consent also generated additional benefits. It gave all potential participants the opportunity to understand the research study and make an informed decision about participation. Consenting prior to randomization also improved statistical power, because those who declined to participate were excluded from the study entirely rather than diluting the intervention effect by lowering take-up. As noted, informed consent may also have helped researchers gain access to data, even if a HIPAA waiver or a data use agreement (DUA, in the case of a limited data set) might have been technically acceptable.

Who was asked for consent?

All participants, in both intervention and comparison groups, were required to give consent to participate in the study. Initial eligibility checks performed by examining electronic medical records guaranteed that all potential participants approached by recruiters met most inclusion criteria. The broader triage population whose records were examined did not give informed consent because this process was already part of program implementation and was not unique to the study.

Individuals were deemed ineligible, and the consent process did not take place, if recruiters found on approaching them that they were cognitively impaired, did not meet eligibility requirements, or could not communicate with the recruiter about the program and the study.

The study included vulnerable participants who were chronically ill, currently hospitalized, and likely economically disadvantaged. Beyond the target population for the intervention, however, specific vulnerable groups governed by human subjects regulations (such as pregnant women, children, or prisoners) were either explicitly excluded (in the case of children) or recruited only incidentally.

How was program enrollment modified for the study?

The Camden Core Model was already in place before the study started. Before the study took place, Camden Coalition staff identified eligible patients using real-time data from their Health Information Exchange (HIE), a database which covers four Camden hospital systems, and then approached patients while in the hospital to explain the program and to invite them to enroll. Because of program capacity constraints, only a small fraction of the eligible population could be offered the program, and participant prioritization was ad hoc prior to the beginning of the study.

The study was designed with inclusion and exclusion criteria, and a triage and bedside enrollment process, similar to those the program already had in place. For randomization, researchers created a randomization list with study IDs and random assignments prior to enrollment. Triage staff, who identified eligible patients using the HIE, assigned a study ID to potential participants without knowing intervention and comparison assignments. Informed consent and the baseline survey took place at the bedside, and recruiters revealed random assignments at the end of the enrollment process. The Camden Coalition hired additional full-time recruiters to meet the scale of the study and strictly separated the functions of their data team, triage staff, and recruiters in order to preserve the integrity of random assignment.
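The separation of roles described above can be illustrated with a minimal sketch of a pre-generated randomization list. This is not the study's actual procedure: the 1:1 allocation, the `HS` ID prefix, the seed, and all function names are assumptions made for illustration.

```python
import random

ARMS = ("intervention", "comparison")

def make_randomization_list(n_ids, seed, prefix="HS"):
    """Return (study_id, arm) pairs generated before enrollment begins."""
    rng = random.Random(seed)  # fixed seed so the list can be reproduced and audited
    # Assumed 1:1 allocation: equal numbers of slots per arm, then shuffled.
    arms = list(ARMS) * (n_ids // 2)
    if n_ids % 2:
        arms.append(rng.choice(ARMS))  # odd list length: last slot drawn at random
    rng.shuffle(arms)
    return [(f"{prefix}{i:04d}", arm) for i, arm in enumerate(arms, start=1)]

def triage_view(rand_list):
    """What triage staff would see: study IDs only, assignments withheld."""
    return [study_id for study_id, _ in rand_list]

def reveal(rand_list, study_id):
    """Look up an assignment; intended for use only after consent and baseline."""
    return dict(rand_list)[study_id]

rand_list = make_randomization_list(10, seed=2014)
print(triage_view(rand_list)[:3])
print(reveal(rand_list, "HS0001"))
```

Keeping the full list with one team and exposing only `triage_view` to another mirrors the idea that the person assigning study IDs cannot see, and so cannot influence, which arm a patient will be assigned to.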

When did random assignment occur and why?

Recruiters revealed random assignments immediately after the informed consent process and the baseline survey were completed. Randomizing after consent was important for two distinct reasons: bias and power. First, it ensured that participation rates were balanced between intervention and comparison groups; this helped to prevent bias because individuals could not self-select into the study at different rates based on intervention assignment. Second, it increased statistical power: researchers were able to exclude those who declined to consent from the study entirely, which raised take-up among those randomized. Had the study team randomized prior to seeking consent, some individuals assigned to the program might have declined to participate, lowering take-up and diluting the measured effect. This approach, however, places a burden on enrollment staff, who must tell participants assigned to the comparison group that they cannot access the intervention. As a result, it is important for researchers to discuss these issues early with the implementing partner and brainstorm whether there are ways to conduct randomization after recruitment in a manner that the partner is comfortable with.
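The power argument can be made concrete with a short calculation. The sketch below relies on a standard simplification that is an assumption here, not something stated by the study team: no one in the comparison group receives the program, and the program affects only those who take it up. Under those assumptions the average effect across the intervention group shrinks in proportion to take-up, and the sample needed to detect it grows with the inverse square of take-up. All numbers are hypothetical.

```python
def diluted_effect(effect_on_participants, take_up):
    """Average effect across everyone randomized to the intervention group."""
    if not 0 < take_up <= 1:
        raise ValueError("take_up must be in (0, 1]")
    return effect_on_participants * take_up

def sample_size_inflation(take_up):
    """Factor by which the required sample grows relative to full take-up."""
    if not 0 < take_up <= 1:
        raise ValueError("take_up must be in (0, 1]")
    return 1 / take_up ** 2

# Hypothetical effect of 10 units among those who actually receive the program.
for take_up in (1.0, 0.8, 0.5):
    print(take_up, diluted_effect(10.0, take_up), round(sample_size_inflation(take_up), 2))
```

With 50 percent take-up, for example, the measured effect is halved and roughly four times the sample would be needed for the same power, which is why excluding decliners before randomization is valuable.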

Who conducted enrollment?

The study team used enrollment specialists employed by Camden Coalition who, while separate from other program staff, were sensitive to participant concerns about research participation. Some specialists were newly hired while others were redeployed from other positions within the Camden Coalition. As much as possible, the Camden Coalition hired specialists from the community where the study took place to allow for greater cultural and demographic alignment with participants. To ensure staffing across the full study period, however, they were flexible in this criterion.

Enrollment specialists approached potential participants to describe the program and seek consent prior to random assignment. Recruiters were bilingual so that they could communicate with patients in either English or Spanish. They were trained to approach eligible patients in a timely manner and in a standardized way following study protocols. They were also trained to introduce themselves and, before talking about the intervention, to ask patients questions that assessed how they were feeling and whether they had the cognitive capacity to listen to and understand the information being provided and to give consent. Camden Coalition staff led the development of this training, building on how enrollment was conducted prior to the RCT.

Some patients were wary of participating in research in general, and both patients and recruitment specialists often felt discouraged when patients were assigned to the comparison group. By limiting enrollment to a small number of recruitment specialists, researchers and the Camden Coalition were able to provide specialized support and training to the recruitment specialists. The Camden Coalition provided staff funding for therapy to support their mental health. The recruitment specialists also supported each other and developed best practices, including language to introduce the study without promising service, methods of preventing undue influence, and ways to support disappointed patients. To help maintain momentum and morale throughout the study period, the team celebrated enrollment milestones with a larger group of Camden Coalition staff, which helped to illustrate that enrollment was a part of a broader organizational goal.

How were risks and benefits described?

The IRB deemed this study minimal risk. The only risk highlighted during enrollment was the loss or misuse of protected health information. The study team noted that this risk existed for both intervention and comparison groups and that they were taking steps to mitigate it. Benefits were similarly modest, and as noted above, recruiters made sure to avoid over-promising potential benefits to participants. The benefits included potentially useful information derived from the results of the study, and receipt of the program (which was described as potentially improving interactions with health care and social service systems). Although not considered a research best practice, the $20 compensation for completing the survey was also listed as a benefit.

How were participants compensated?

Participants who completed the baseline survey (administered after consent and prior to randomization) were given a $20 gift card for their time. Recruiters informed participants that the survey would take approximately 30 minutes.

Randomized Evaluation of the Nurse-Family Partnership

Description of the study

J-PAL affiliated researchers partnered with the South Carolina Department of Health and Human Services (DHHS), other research collaborators, and other partners to conduct a randomized evaluation of the Nurse-Family Partnership (NFP) program. This program pairs low-income first-time mothers with a personal nurse who provides at-home visits from early pregnancy through the child’s second birthday. The goal of the program is to support families during the transition to parenthood and through children’s early childhood to improve their health and wellbeing. The program includes up to 40 home visits (15 prenatal visits, 8 postpartum visits up to 60 days after delivery, and 17 visits during the child’s first two years), with services available in Spanish and English. An expansion of the program in South Carolina to nearly double its reach presented an opportunity to evaluate the program and measure its impact on adverse birth outcomes like low birth weight, child development, maternal life changes through family planning, and other outcomes, further described in the study protocol.

Different channels were used to identify potential participants, including direct referral through local health care providers, schools, and Special Supplemental Nutrition Program for Women, Infants, and Children (WIC) agencies; direct referrals from the Medicaid eligibility database to NFP; referrals by friends or family members; knowledge of the program through digital and printed advertisements; or identification of participants by the outreach team hired for this purpose during the study period. After identification, NFP nurses who were also direct service providers visited the potential participants at their homes or a private location of their choice, assessed their eligibility, and, if eligible, conducted the informed consent process. After obtaining informed consent, the nurses administered the baseline survey, after which the participant was compensated with a $25 gift card for their time and received a referral list to other programs and services in the area. Immediately after, participants were randomized into the intervention or comparison group using the survey software SurveyCTO. Two-thirds of participants were randomly allocated to the intervention group and one-third to the comparison group. Enrollment ran from April 1, 2016 to March 17, 2020.

Enrollment at a glance:

- Enrollment period: 4 years (April 1, 2016 to March 17, 2020)

- Sample size: 5,655 Medicaid-eligible, nulliparous pregnant individuals at less than 28 weeks’ gestation

- Subjects contacted: 12,189 eligible and invited to participate

- Who enrolled: Nurse home visitors (direct service providers)

- Where enrolled: Participant’s home or location of preference

- When enrolled: Enrolled prior to random assignment; on the spot randomization

- Level of risk: Minimal risk

- Enrollment compensation: $25 gift card

Which activities required consent?

Similar to Health Care Hotspotting, the NFP study protocol required consent from participants to be part of the evaluation, as well as consent to use their personally identifiable information to link to administrative data for up to 30 years. These data included Medicaid claims data, hospital discharge records, and vital statistics, as well as a broad range of linked data covering social services, education, mental health, criminal justice, and more. These data allowed the researchers to gather information on the health and well-being of mothers and children. For those assigned to the intervention group, program participation involved a separate consent process.

Who was asked for consent?

All participants, in both intervention and comparison groups, were required to give consent to participate in the study. Potential participants were first-time pregnant people who were at less than 28 weeks’ gestation, were income-eligible for Medicaid during their pregnancy, were aged 15 or older, and lived in a catchment area served by NFP nurses. Participants consented for themselves and for their children, as stated in the informed consent process.

The NFP program focused on enrolling people in early pregnancy to ensure the home visits included the prenatal period. People were excluded from the study if they were incarcerated or living in a lockdown facility, or under the age of 15. Program services were provided in English and Spanish, and additional translation services were available for participants who spoke other languages. To be eligible for the study, people also needed to have enough language fluency that they would be able to benefit therapeutically from the program.

To identify children born during the study, researchers probabilistically matched mothers to births recorded in vital records, using social security number, birth date, name, and Medicaid ID.
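As a rough illustration of what probabilistic matching on these identifiers can look like, the sketch below scores candidate birth records by weighted agreement across fields and accepts the best candidate only above a threshold. It is not the researchers' linkage method: the field weights, the threshold, the name-similarity measure, and the example records are all hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical weights: how much agreement on each identifier counts.
WEIGHTS = {"ssn": 5.0, "medicaid_id": 4.0, "birth_date": 2.0, "name": 2.0}

def name_similarity(a, b):
    """Similarity in [0, 1] that tolerates small spelling differences."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()

def match_score(mother, birth_record):
    """Sum the weights of agreeing fields; missing values contribute nothing."""
    score = 0.0
    for field in ("ssn", "medicaid_id", "birth_date"):
        a, b = mother.get(field), birth_record.get(field)
        if a and b and a == b:
            score += WEIGHTS[field]
    if mother.get("name") and birth_record.get("name"):
        score += WEIGHTS["name"] * name_similarity(mother["name"], birth_record["name"])
    return score

def best_match(mother, birth_records, threshold=6.0):
    """Return the highest-scoring record, or None if nothing clears the threshold."""
    if not birth_records:
        return None
    score, record = max(
        ((match_score(mother, r), r) for r in birth_records),
        key=lambda pair: pair[0],
    )
    return record if score >= threshold else None

# Entirely fictitious example records.
mother = {"ssn": "000-00-0001", "medicaid_id": None,
          "birth_date": "1995-03-02", "name": "Jane Doe"}
births = [
    {"ssn": "000-00-0001", "medicaid_id": "M111",
     "birth_date": "1995-03-02", "name": "Jane Do"},
    {"ssn": "000-00-0002", "medicaid_id": "M222",
     "birth_date": "1990-01-01", "name": "Mary Smith"},
]
print(best_match(mother, births))
```

Using several identifiers together lets a record still match when one field is missing or misspelled, at the cost of having to choose weights and a threshold that balance false matches against missed matches.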

How was program enrollment modified for the study?

NFP had a decentralized method of identifying participants for their program prior to the study. Once patients were identified, nurses would enroll them as part of the first home visit. Under this system, the program served about 600 women per year.

Alongside the randomized evaluation, the NFP program was scaled up to serve an average of 1,200 people per year, compared to 600 people served prior to the study. Extra personnel were hired to conduct outreach to new eligible participants.

Although the randomized evaluation coincided with an expansion of the program and the hiring of new staff, including an outreach team, NFP leadership requested that the nurse home visitors be the ones conducting study enrollment. Therefore, nurses conducted on-the-spot randomization and informed participants about their study group. NFP and local implementing partners believed their nurses were better equipped to work with the study population than external surveyors, as nurses who deliver the program are well-trained in working with low-income, first-time mothers from the local communities. They also believed that shifting the recruitment model to a centralized process with enrollment specialists was infeasible given the scale of the study and the program.

The study’s informed consent process was incorporated into the pre-existing program recruitment and consent process, so participants received both program and study information at the same time. This allowed the study team to ensure participants also received clear information about the program.

When did random assignment occur and why?

Random assignment was conducted on the spot, after participants consented to the study and completed the baseline survey. The survey software, SurveyCTO, automatically assigned participants to either the intervention or the comparison group. Two-thirds of the sample was randomly allocated to receive the intervention and one-third to the comparison group.
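The logic of on-the-spot assignment with a two-thirds allocation can be sketched in a few lines. This is a generic illustration in Python, not SurveyCTO's implementation or the study's form logic; the function name and the guard on consent and survey completion are assumptions.

```python
import random

def assign_on_the_spot(consented, baseline_complete, rng, p_intervention=2 / 3):
    """Draw an assignment only once consent and the baseline survey are done."""
    if not (consented and baseline_complete):
        raise ValueError("randomize only after consent and the baseline survey")
    return "intervention" if rng.random() < p_intervention else "comparison"

rng = random.Random()  # in practice the software would supply the randomness
print(assign_on_the_spot(True, True, rng))
```

Because each participant is drawn independently, the realized split will be close to, but not exactly, two-thirds and one-third.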

As in Health Care Hotspotting, seeking consent prior to randomization helped ensure balance between intervention and comparison groups, as both received the same information and were equally likely to consent to be part of the study. On-the-spot randomization also maximized statistical power, as only those who consented to participate were randomized; the alternative, randomizing first and then approaching participants, would have included people who might decline to participate. Although withdrawal after randomization could still occur, nurses were able to conduct the first home visit immediately for those in the intervention group, so this strategy helped improve take-up of the intervention.

Who conducted enrollment?

Nurse home visitors conducted enrollment and randomization with support from a recruitment support specialist (a research associate) at J-PAL North America. This involved investment from the research team to dedicate sufficient staff capacity to train and support nurses during the four years in which enrollment took place. The research associate was responsible for training nurses on study protocols, providing remote and in-person field support, monitoring survey recordings for quality and compliance checks, managing gift cards and study tablets, and maintaining morale and building relationships with nurses.

Nurses invested in learning how to be survey enumerators in addition to delivering high-quality nursing care. The research team trained all nurses on how to recruit, assess eligibility, deliver informed consent, randomize patients into intervention and comparison groups, and deliver the baseline survey using SurveyCTO software on a tablet. All nurses had to complete this training before they could enroll patients. The research team went to South Carolina before the start of the study and conducted a two-day in-person training to practice obtaining informed consent and using tablets to administer the baseline survey. Yearly refresher trainings were offered.

Communicating comparison group assignment was one of the main challenges for nurses during study enrollment. Nurses navigated this challenge by handing patients the tablet and having them press the randomization button, which helped nurses and patients to remember that assignment to a group was automatically generated and out of the control of both parties.

The research team provided additional resources to mitigate the concern that nurses may not adhere to random assignments. The research team conducted quarterly in-person enrollment and field trainings for nurses and nurse supervisors that highlighted benefits for comparison group participants, including that all comparison group participants benefit from meeting with a caring health care professional who can help with Medicaid enrollment if needed and that they receive a list of other available services. They also reminded nurses that the evaluation helped to expand services to more patients than would otherwise be served.

The research team’s recruitment support specialist operated a phone line that nurses could call for emotional and technical support, coordinated in-person and web-based training for new nurses, sent encouragement to the nurses, and monitored fidelity to the evaluation design by conducting audio checks on the delivery of informed consent and the baseline survey. The support specialist also troubleshot any issues with the tablet in real time and helped to resolve any data input errors...

How were risks and benefits described?

The primary risk of participating in the study was the loss or misuse of protected health information. The benefits included potentially useful information derived from the results of the study, and receipt of the intervention, which could improve the health and wellbeing of participants and their child.

How were participants compensated?

After completing the baseline survey, all study participants were compensated with a $25 Visa gift card for the time it took them to complete the survey.

Acknowledgements: Thanks to Amy Finkelstein, Catherine Darrow, Jesse Gubb, Margaret McConnell, and Noreen Giga for their thoughtful contributions. Amanda Buechele copy-edited this document. Creation of this resource was supported by the National Institute On Aging of the National Institutes of Health under Award Number P30AG064190. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

J-PAL’s Research Resources

J-PAL’s Research Resources provide additional information on topics discussed in this resource, including the Regular intake and consent research resource.

Health Care Hotspotting

- Evaluation summary

- Paper

- Protocol

- Consent forms

Nurse Family Partnership

- Evaluation summary

- Paper

- Protocol

- Consent form can be found in the Protocol, Additional File 3

Camden Coalition of Healthcare Providers. “Camden Core Model.” Camden Coalition of Healthcare Providers. (December 2, 2021). Accessed February 6, 2023. https://camdenhealth.org/care-interventions/camden-core-model/.

Camden Coalition of Healthcare Providers. “The Camden Core Model – Patient Selection and Triage Methodology.” Camden Coalition of Healthcare Providers. Accessed February 6, 2023. https://camdenhealth.org/wp-content/uploads/2019/11/Care-Management_Triage_11022018_v5.pdf.

Finkelstein, Amy, Annetta Zhou, Sarah Taubman, and Joseph Doyle. “Health Care Hotspotting — a Randomized, Controlled Trial.” New England Journal of Medicine 382, no. 2 (2020): 152–62. https://doi.org/10.1056/nejmsa1906848.

Finkelstein, Amy, Annetta Zhou, Sarah Taubman, and Joseph Doyle. “Supplementary Appendix. Healthcare Hotspotting – A Randomized Controlled Trial.” New England Journal of Medicine 382, no. 2 (2020): 152–62. https://www.nejm.org/doi/suppl/10.1056/NEJMsa1906848/suppl_file/nejmsa1906848_appendix.pdf.

Finkelstein, Amy, Annetta Zhou, Sarah Taubman, and Joseph Doyle. “Supplementary Appendix. Healthcare Hotspotting – A Randomized Controlled Trial.” New England Journal of Medicine 382, no. 2 (2020): 152–62. https://www.nejm.org/doi/suppl/10.1056/NEJMsa1906848/suppl_file/nejmsa1906848_protocol.pdf.

Harvard T.H. Chan School of Public Health. “Partners.” South Carolina Nurse-Family Partnership Study Website. Accessed February 6, 2023. https://www.hsph.harvard.edu/sc-nfp-study/partners/.

Harvard T.H. Chan School of Public Health. “Pay for Success.” South Carolina Nurse-Family Partnership Study, July 1, 2022. https://www.hsph.harvard.edu/sc-nfp-study/about-the-study-about-the-study/pay-for-success/.

Harvard T.H. Chan School of Public Health. “People.” South Carolina Nurse-Family Partnership Study Website. Accessed February 6, 2023. https://www.hsph.harvard.edu/sc-nfp-study/people/.

McConnell, Margaret A., Slawa Rokicki, Samuel Ayers, Farah Allouch, Nicolas Perreault, Rebecca A. Gourevitch, Michelle W. Martin, et al. “Effect of an Intensive Nurse Home Visiting Program on Adverse Birth Outcomes in a Medicaid-Eligible Population.” JAMA 328, no. 1 (2022): 27. https://doi.org/10.1001/jama.2022.9703.

McConnell, Margaret A., R. Annetta Zhou, Michelle W. Martin, Rebecca A. Gourevitch, Maria Steenland, Mary Ann Bates, Chloe Zera, Michele Hacker, Alyna Chien, and Katherine Baicker. “Protocol for a Randomized Controlled Trial Evaluating the Impact of the Nurse-Family Partnership’s Home Visiting Program in South Carolina on Maternal and Child Health Outcomes.” Trials 21, no. 1 (2020). https://doi.org/10.1186/s13063-020-04916-9.

The Abdul Latif Jameel Poverty Action Lab. “Health Care Hotspotting in the United States.” The Abdul Latif Jameel Poverty Action Lab (J-PAL). Accessed February 6, 2023. https://www.povertyactionlab.org/evaluation/health-care-hotspotting-united-states.

The Abdul Latif Jameel Poverty Action Lab. “The Impact of a Nurse Home Visiting Program on Maternal and Early Childhood Outcomes in the United States.” The Abdul Latif Jameel Poverty Action Lab (J-PAL). Accessed December 22, 2022. https://www.povertyactionlab.org/evaluation/impact-nurse-home-visiting-program-maternal-and-early-childhood-outcomes-united-states.

South Carolina Healthy Connections. “Fact Sheet: South Carolina Nurse -Family Partnership Pay for Success Project.” Accessed February 6, 2023. https://socialfinance.org/wp-content/uploads/2016/02/021616-SC-NFP-PFS-Fact-Sheet_vFINAL.pdf.

Lessons for assessing power and feasibility from studies of health care delivery

Introduction

This resource highlights key lessons for designing well-powered randomized evaluations, based on evidence from health care delivery studies funded and implemented by J-PAL North America.1 Determining whether a study is sufficiently powered to detect effects is an important step at the outset of a project, because it helps determine whether the project is worth pursuing. Underpowered studies carry dangers; for example, the lack of a statistically significant result may be interpreted as evidence that a program is ineffective when the study was simply underpowered. Although designing well-powered studies is critical in all domains, health care delivery presents particular challenges: direct effects on health outcomes are often difficult to measure, and the question of for whom an intervention is effective is particularly important because differential effects can have a dramatic impact on the type of care received. Health care delivery settings also present opportunities, in that implementing partners have important complementary expertise to address these challenges.

Key takeaways

- Communicate with partners to:

  - Choose outcomes that align with the program’s theory of change

  - Gather data for power calculations

  - Select meaningful minimum detectable effect sizes

  - Assess whether a study is worth pursuing

- Anticipate challenges when measuring health outcomes:

  - Plan to collect primary data from sufficiently large samples

  - Choose health outcomes that can be impacted during the study period and consider prevalence in the sample

  - Consider whether results, particularly null results, will be informative

- Think carefully about subgroup analysis and heterogeneous effects:

  - Set conservative expectations about subgroup comparisons, which may be underpowered or yield false positives

  - Calculate power for the smallest comparison

  - Stratify to improve power

  - Choose subgroups based on theory

The value of talking with partners early (and often)

Power calculations should be conducted as early as possible. Starting conversations about power calculations with implementing partners early not only provides insight on the feasibility of the study, but also allows a partner to be involved in the research process and offer necessary inputs like data. Estimating power helps researchers and implementing partners understand each other's perspectives and create a common understanding of the possibilities and constraints of a research project. Implementing partners can be incredibly useful in making key design decisions that affect power.

Decide what outcomes to measure based on theory of change

An intervention may affect many aspects of health and its impact could be measured by many outcomes. Researchers will need to decide what outcomes to prioritize to ensure adequate power and a feasible study. Determining what outcomes to prioritize collaboratively with implementing partners helps ensure that outcomes are theory-driven and decision-relevant while maximizing statistical power.

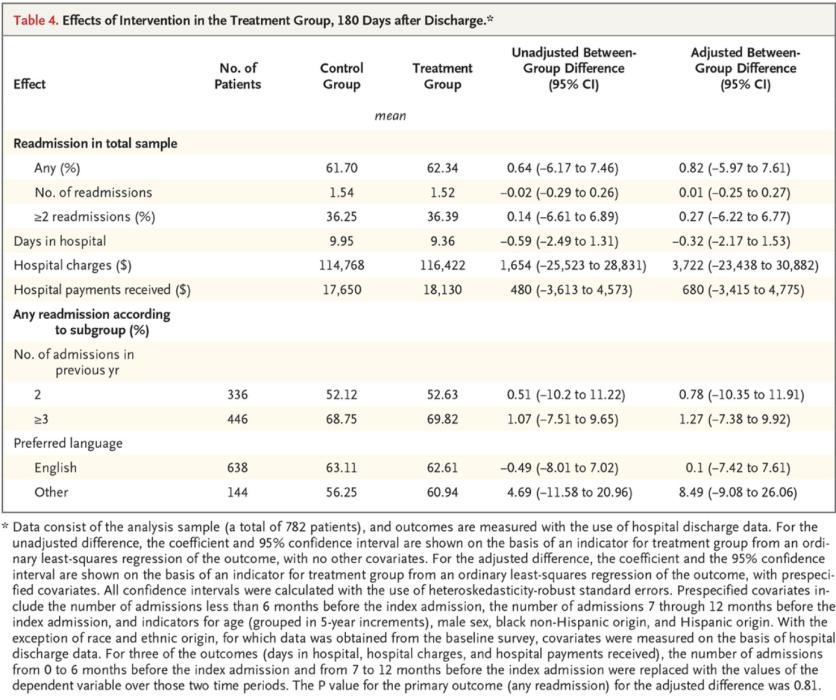

- In Health Care Hotspotting, an evaluation of the Camden Coalition of Healthcare Providers’ care coordination program for high cost, high need patients, researchers and the Camden Coalition chose hospital readmission rates as their primary outcome. This was not straightforward — the program may have had additional benefits in other domains — but this outcome aligned with the program’s theory of change, was measurable in administrative data, and ensured adequate power despite the limitations imposed by a relatively small sample. Although there was also interest in measuring whether the intervention could reduce hospital spending, this outcome was not chosen as a primary outcome2 because there was not sufficient power and because cost containment was less central to the program’s mission.

Provide data and understand constraints

While it is possible to conduct power calculations without data by making simplifying assumptions, partners can provide invaluable information for initial power calculations. Data from partners, including historical program data and statistics, the maximum available sample size (based on their understanding of the target population), and take-up rates gleaned from existing program implementation, can be used to estimate power within the context of a study. Program data may be preferable to data drawn from national surveys or other sources because it comes from the context in which an evaluation is likely to operate. However, previous participants may differ from study participants, so researchers should always assess sensitivity to assumptions made in power calculations. (See this resource for more information on testing sensitivity to assumptions).

- A randomized evaluation of Geisinger Health’s Fresh Food Farmacy program for patients with diabetes used statistics from current clients as baseline data for power calculations in their pre-analysis plan. This allowed researchers to use baseline values of the outcomes (HbA1c, weight, hospital visits) that were more likely to be reflective of the population participating in the study. It also allowed researchers to investigate how controlling for lagged outcomes would improve power. Without collaboration from implementing partners, these measures would be hard to approximate from other sources.

- In Health Care Hotspotting, the Camden Coalition provided historical electronic medical record (EMR) data, which gave researchers inputs for the control mean and standard deviation necessary to calculate power for hospital readmissions. The actual study population, however, had twice the assumed readmission rate (60% instead of 30%), which led to a slight decrease in power relative to expectations. This experience emphasizes the importance of assessing sensitivity.
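A sensitivity check of this kind can be sketched in a few lines. The example below is an illustration only: it assumes a standard normal-approximation formula for the minimum detectable effect of a binary outcome, a two-sided 5 percent test, 80 percent power, equal-sized arms, and a hypothetical sample of 800 people. The function name `mde_binary` and the sample size are assumptions for illustration, not details taken from the study.

```python
from math import sqrt
from statistics import NormalDist


def mde_binary(n, control_mean, treated_share=0.5, alpha=0.05, power=0.80):
    """Approximate MDE (as a proportion) for a binary outcome in a two-arm,
    individually randomized trial, using the normal approximation."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    variance = control_mean * (1 - control_mean)  # variance of a 0/1 outcome
    return z * sqrt(variance / (treated_share * (1 - treated_share) * n))


N_ILLUSTRATIVE = 800  # hypothetical sample size, chosen only for illustration

# Recompute the MDE across a range of plausible control-group readmission rates.
for rate in (0.30, 0.45, 0.60):
    mde = mde_binary(N_ILLUSTRATIVE, rate)
    print(f"control mean {rate:.0%}: MDE = {mde * 100:.1f} percentage points")
```

Because the variance of a binary outcome, p(1 - p), changes slowly between 0.3 and 0.6, the MDE in this sketch rises by only about 7 percent when the control mean doubles, which is in line with the slight decrease in power described above. Outcomes with control means far from 0.5 are more sensitive to this assumption.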

Determine reasonable effect sizes

Choosing reasonable effect sizes is a critical component of calculating power and often a challenge. In addition to consulting academic literature, conversations with partners can help determine what level of precision will be decision-relevant. The minimum detectable effect (MDE) required for an evaluation should be determined in that particular context, where the partner’s own cost-benefit assessment or a more standardized benchmark may guide discussions. The risks of interpreting underpowered evaluations often fall on the implementing partner, where lack of power increases the likelihood that an intervention is wrongly seen as ineffective, so choosing a minimum detectable effect should be a joint decision between the research team and partner.

- In Health Care Hotspotting, researchers were powered to detect a 9.6 percentage point effect on the primary outcome of hospital readmissions. While smaller effects were potentially clinically meaningful, the research team and their partners determined that this effect size would be meaningful because it would rule out the much larger 15-45% reductions in readmissions found in previous evaluations of similar programs delivered to a different population (Finkelstein et al. 2020).

- In Fresh Food Farmacy, researchers produced target minimum detectable effects (see Table 2) in collaboration with their implementing partners and demonstrated that the study sample size would allow them to detect effects below these thresholds.

- Implementing partner judgment is critical when deciding to pursue an evaluation that can only measure large effects. Researchers proceeded with an evaluation of legal assistance for preventing evictions, which compared an intensive legal representation program to a limited, much less expensive, and more scalable program, based on an understanding that the full representation program would only be valuable if it could produce larger effects than the limited program. Estimating potentially small effects with precision was not of interest, given the cost of the program and the immense, unmet need for services.

The section "Calculate minimum detectable effects and consider prevalence of relevant conditions" below covers how special considerations, such as the prevalence of a condition, should also inform the choice of reasonable effect sizes.

Assess feasibility and make contingency plans

Early conversations about power calculations can help set clear expectations on parameters for study feasibility and define protocols for addressing future implementation challenges. Sometimes early conversations lead to a decision not to pursue a study. Talking points for non-technical conversations about power are included in this resource.

- During a long-term, large-scale evaluation of the Nurse Family Partnership in South Carolina, early power conversations proved to be helpful when making decisions in response to new constraints. Initially the study had hoped to enroll 6,000 women over a period of four years, but recruitment for the study was cut short due to Covid-19. Instead, the study enrolled 5,655 women, 94% of what was originally targeted. Since the initial power calculations anticipated a 95% participation rate and used conservative assumptions, the decision to stop enrollment due to the pandemic was made without concern that it would jeopardize power for the evaluation. Given the long timeline of the study and turnover in personnel, it was important to revisit conversations about power and involve everyone in the decision to halt enrollment.

- A recent study measuring the impact of online social support on nurse burnout was halted due to lack of power to measure outcomes. In the proposed study design, the main outcome was staff turnover. Given a sample of approximately 25,000 nurses, power calculations estimated a minimum detectable effect size of 1.5 percentage points, which represents an 8 to 9 percent reduction in staff turnover. When planning for implementation of the study, however, randomization was only possible at the nursing unit level. Given a new sample of approximately 800 nursing units, power calculations estimated a minimum detectable effect size of 7 to 10 percentage points, which represents roughly a 50 percent reduction in turnover. These new power calculations assumed complete take-up, which did not seem feasible to the research team. With the partner’s support, other outcomes of interest were explored, but these had low rates of prevalence in administrative data. Early conversations about study power surfaced constraints early, which prevented embarking on an infeasible research study.

Challenges of measuring health outcomes

Data collection and required sample sizes are two fundamental challenges to studying impacts on health outcomes. Health outcomes, other than mortality, are rarely available in administrative data, or if they are, may be differentially measured for treatment and control groups.3 For example, consider researchers who wish to measure blood pressure but rely on measurements taken at medical appointments. Only those who choose to go to the doctor appear in the data. If the intervention increases the number of appointments attended, the treatment group will be better represented in the data than the control group, biasing the result. Therefore, measuring health outcomes usually requires researchers to conduct costly primary health data collection to avoid bias resulting from differential coverage in administrative data.4

Required sample sizes also pose challenges to research teams studying health care interventions. Because many factors influence health, health care delivery interventions may be presumed to have small effects. This requires large sample sizes or lengthy follow-up periods. Additionally, health impacts are likely to be downstream of health care delivery or behavioral interventions that may have limited take up, further reducing power.5 As a result of these challenges, many evaluations of health care delivery interventions measure inputs into health — for example, receiving preventive services like a flu shot (Alsan, Garrick, and Graziani 2019) or medication adherence — instead of, or in addition to, measuring downstream health outcomes. These health inputs can often be measured in administrative data and directly attributed to the intervention.
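The cost of limited take-up can be made concrete with a small sketch. It relies on the rule noted in this resource that required sample size is inversely proportional to the square of take-up, and it assumes that the effect on those who take up the program is fixed and that only the treatment group can access the program unless stated otherwise. The function name `sample_size_multiplier` is hypothetical.

```python
def sample_size_multiplier(take_up_treatment, take_up_control=0.0):
    """Factor by which the sample must grow to keep power unchanged when
    only part of the treatment group (net of the control group) takes up
    the program."""
    compliance = take_up_treatment - take_up_control
    if compliance <= 0:
        raise ValueError("treatment assignment must raise take-up")
    # The intention-to-treat effect shrinks in proportion to compliance,
    # so the required sample grows with its inverse square.
    return 1 / compliance ** 2


for rate in (1.0, 0.75, 0.5, 0.25):
    factor = sample_size_multiplier(rate)
    print(f"take-up {rate:.0%}: {factor:.1f}x the full-take-up sample size")
```

With 50 percent take-up the multiplier is four, matching the rule of thumb; at 25 percent it is sixteen, which is why downstream health outcomes of programs with modest take-up are so demanding to study.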

Despite these challenges, researchers may wish to demonstrate actual health impacts of an intervention when feasible. This section introduces key steps in the process of designing a health outcome measurement strategy illustrated by two cases, the Oregon Health Insurance Experiment (OHIE) and a study examining a workplace wellness program. These cases highlight thoughtful approaches and challenges to estimating precise effects and include examples for interpreting null results.

Choose measurable outcomes plausibly affected by the intervention in the time frame allotted

Not all aspects of health will be expected to change as a result of a particular intervention, especially within a study timeframe. For instance, preventing major cardiovascular events like heart attacks may be the goal of an intervention, but it is more feasible to measure blood pressure and cholesterol, both of which are risk factors for heart attacks, than to measure heart attacks, which might not occur for years or at all. What to measure should be determined by a program’s theory of change, an understanding of the study population, and the length of follow up.

- In the OHIE, researchers measured the effects of Medicaid6 on hypertension, high cholesterol, diabetes, and depression. These outcomes were chosen because they were “important contributors to morbidity and mortality, feasible to measure, prevalent in the low-income population of the study, and plausibly modifiable by effective treatment within two years” (Baicker et al, 2013). For insurance to lead to observable improvements in these outcomes, receiving Medicaid would need to increase health care utilization, lead to accurate diagnoses, and generate effective treatment plans that are followed by patients. Any “slippage” in this theory of change, such as not taking prescribed medication, would limit the ability to observe effects. Diabetes, for example, was not as prevalent as expected in the sample, reducing power relative to initial estimates.

- In an evaluation of a workplace wellness program, researchers measured effects of a wellness program on cholesterol, blood glucose, blood pressure, and BMI, because they were all elements of health plausibly improved by the wellness program within the study period.

Make sample size decisions and a plan to collect data

Measuring health outcomes will likely require researchers to collect data themselves, in person, with the help of medical professionals. In this situation, data collection logistics and costs will limit sample size. The costs of data collection must be balanced with sample sizes needed to draw informative conclusions. In each study, researchers restricted their data collection efforts in terms of geography and sample size. In addition, clinical measurements were relatively simple, involving survey questions, blood tests7, and easily portable instruments. Both of these strategies addressed cost and logistical constraints.

- In the OHIE, clinical measures were only collected in the Portland area, with 20,745 people receiving health screenings, despite the intervention being statewide with a study sample size of over 80,000.

- In workplace wellness, researchers collected clinical data from all 20 treatment sites but only 20 of the available 140 control sites in the study.

Calculate minimum detectable effects and consider prevalence of relevant conditions

In the health care context, researchers should also consider the prevalence of individuals who are at risk for a health outcome and for whom we can expect the intervention to potentially address that outcome. In other words, researchers must understand the number of potential compliers with that aspect of treatment, where a complier receives that element of the intervention only when assigned to treatment, in comparison to other participants who may always or never receive the intervention.

Interventions like health insurance or workplace wellness programs are broad and multifaceted, but measurable outcomes may only be relevant for small subsets of the population. Consider, for example, blood sugar and diabetes. We should only expect HbA1c (a blood sugar measure) to change as a result of the intervention for those either with diabetes or at risk for diabetes. The theory of change for reducing HbA1c as a result of insurance requires going to a provider, being assessed by a provider, and being prescribed either a behavioral modification or medication. If most people do not have high blood sugar and are therefore not told by their provider to reduce it, we should not expect the intervention to affect HbA1c. If this complier group is small relative to the study sample, the intervention will be poorly targeted, and this will reduce power similarly to low take-up or other forms of noncompliance.

Suppose we have a sample of 1,000 people, 10 percent with high HbA1c, and another 10 percent who have near-high levels that would prompt their provider to offer treatment. Initial power calculations with a control mean of 10 percent produce an MDE of about 6 percentage points (for a binary high/low outcome). However, once we correct for 20 percent compliance, observing this overall effect requires a direct effect for compliers of roughly 30 percentage points. This is significantly larger and might make a seemingly well-powered study seem infeasible.
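The arithmetic in this example can be reproduced with a short sketch. It assumes a standard normal-approximation MDE formula for a binary outcome, a two-sided 5 percent test, and equal-sized treatment and control groups. None of these choices are stated in the text, and the function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def mde_binary(n, control_mean, treated_share=0.5, alpha=0.05, power=0.80):
    """Approximate overall MDE (as a proportion) for a binary outcome."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    variance = control_mean * (1 - control_mean)
    return z * sqrt(variance / (treated_share * (1 - treated_share) * n))


def required_complier_effect(overall_mde, complier_share):
    """Effect needed among compliers to produce a given overall effect,
    assuming the intervention has no effect on anyone else."""
    return overall_mde / complier_share


n = 1_000
complier_share = 0.10 + 0.10  # high HbA1c plus near-high HbA1c

for power in (0.80, 0.90):
    overall = mde_binary(n, control_mean=0.10, power=power)
    among_compliers = required_complier_effect(overall, complier_share)
    print(f"power {power:.0%}: overall MDE {overall * 100:.1f} pp, "
          f"required complier effect {among_compliers * 100:.1f} pp")

# The figures quoted in the text: a 6 pp overall MDE with 20 percent compliers.
print(f"{required_complier_effect(0.06, complier_share) * 100:.0f} pp")
```

Under these assumptions, 80 percent power gives an overall MDE a little above 5 percentage points and 90 percent power gives a little above 6, so the figure in the text is of the same order. In every case the required effect among compliers is five times the overall MDE, because only one person in five can plausibly respond.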

In cases where data from the sample is not yet available, broad-based survey or administrative data can be used to measure population-level (i.e., control group) outcome means and standard deviations and the prevalence of certain conditions. These data can also be used to investigate whether other variables are useful predictors of chosen outcomes and can be included in analysis to improve precision. As always, the feasibility of a study should be assessed using a wide range of possible scenarios.

- In the OHIE, researchers noted that they overestimated the prevalence of certain conditions in their sample. Ex-post, they found that only 5.1% had diabetes and 16.3% had high blood pressure. This limited the effective sample size in which one might expect an effect to be observed, effectively reducing take up and statistical power relative to expectations. One solution when relevant sample sizes are smaller than expected is to restrict analysis to subgroups expected to have larger effects ex ante, such as those with a preexisting condition, for which the intervention may be better targeted.

- In the workplace wellness study, the researchers note that they used data from the National Health and Nutrition Examination Survey, weighted to the demographics of the study population, to estimate control group statistics. These estimates proved quite accurate resulting in the expected power to detect effects.

In both cases, researchers proceeded with analysis while acknowledging the limits of their ability to detect effects.

Ensure a high response rate

Response rates have to be factored into the anticipated sample size. Because sample size is a critical input for determining power, designing data collection methods that prevent attrition is an important strategy for maintaining sample size throughout a study. Concerns about attrition grow when data collection requires in-person contact and medical procedures.8

- To ensure a high response rate in Oregon, researchers devoted significant resources to identifying and collecting data from respondents. This included several forms of initial contact, a tracking team devoted to locating respondents with out-of-date contact information, flexible data collection (interviews were done via several methods and health screenings could be performed in a clinic or at home), intensive follow up dedicated to a random subset of non-respondents, and significant compensation for participation ($30 for the interview, an additional $20 for the dried blood spot collection, and $25 for travel if the interview was at a clinic site). These efforts resulted in an effective response rate of 73%. Dried blood spots (a minimally invasive method of collecting biometric data) and short forms of typical diagnostic questionnaires were used to reduce the burden on respondents. These methods are detailed in the supplement to Baicker et al. 2013.

- In workplace wellness, health surveys and biometric data collection were done on site at workplaces and employees received a $50 gift card for participation. Some employees received an additional $150. Participation rates in the wellness program among current employees were just above 40%. However, participation in data collection was much lower — about 18% — primarily because less than half of participants ever employed during the study period were employed during data collection periods. Participants had to be employed at that point in the study, present on those particular days, and be willing to participate in order to be included in data collection.

Understand your results and what you can and cannot rule out

Both the OHIE and workplace wellness analyses produced null results on health outcomes, but not all null results are created equal.

- The OHIE results differed across outcome measures. There were significant improvements only in the rate of depression; depression was also the most prevalent condition of the four examined. There were no detectable effects on diabetes or blood pressure, but what could be concluded in each of these domains differed. Medicaid’s effect on diabetes was imprecise, with a wide confidence interval that included both no effect and large positive effects, including a very plausible positive effect. The results could not rule out the effect one might expect to find if you estimated how many people had diabetes, saw their doctor as a result of getting insurance, got medication, and how effective that medication is at reducing HbA1c (based on clinical trial results). This is not strong evidence of no effect. In contrast, Medicaid’s null effect on blood pressure could rule out much larger previous estimates from quasi-experimental work because its confidence interval did not include the larger estimates generated from quasi-experimental work (Baicker et al, 2013, The Oregon Experiment: Effects of Medicaid on Clinical Outcomes).

- The effects on health shown in Workplace Wellness were all nearly zero. Given that impact measurements were null across a variety of outcome measures and another randomized evaluation of a large scale workplace wellness program found similar results, it is reasonable to conclude that the workplace wellness program did not affect health significantly in the study time period.

Does health insurance not affect health?

New research has since demonstrated health impacts of insurance, but the small effect sizes emphasize why large sample sizes are needed. The IRS partnered with researchers to randomly send letters to taxpayers who paid a tax penalty for lacking health insurance coverage, encouraging them to enroll. They sent letters to 3.9 million out of 4.5 million potential recipients. The letters were effective at increasing health insurance coverage and in reducing mortality, but the effects on mortality were small: among middle aged adults (45-64 years old) they saw a 0.06 percentage point decline in mortality, one fewer death for about 1,600 letters.

Being powered for subgroup analysis and heterogeneous effects

Policymakers, implementing partners, and researchers may be interested in learning for whom a program works best, not only in average treatment effects for a study population. However, subgroup analysis is often underpowered and increases the risk of false positive results due to the larger number of hypotheses being tested. Care must be taken to balance the desire for subgroup analysis and the need for sufficient power.

Talk to partners and set expectations about subgroups

Set conservative expectations with partners before analysis begins about what subgroup analyses may be feasible and what can be learned from them. Underpowered comparisons and large numbers of comparisons should be treated with caution for two reasons. First, testing many hypotheses raises the likelihood of Type I errors (false positives). Second, an underpowered estimate, conditional on being statistically significant, will overestimate the true effect.
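The false positive risk from many comparisons can be illustrated with a simple calculation. The sketch below assumes that the tests are independent and that every null hypothesis is true, which is a simplification, and it shows a Bonferroni correction as one common adjustment rather than the method used in any study discussed here. Function names are illustrative.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Probability of at least one false positive across independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** n_tests


def bonferroni_alpha(n_tests, alpha=0.05):
    """Per-test significance level that keeps the familywise rate at or
    below alpha (a conservative adjustment)."""
    return alpha / n_tests


for k in (1, 4, 10, 20):
    print(f"{k:>2} subgroup tests: "
          f"P(any false positive) = {familywise_error_rate(k):.2f}, "
          f"Bonferroni per-test alpha = {bonferroni_alpha(k):.4f}")
```

With ten independent subgroup tests at the 5 percent level, the chance of at least one spurious finding is roughly 40 percent. Tightening the per-test threshold controls this risk but lowers power for each comparison, which is one reason to limit the number of subgroups.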

Conduct power calculations for the smallest relevant comparison

A study that is well-powered for an average treatment effect may not be powered to detect effects within subgroups. Power calculations should be done using the sample size of the smallest relevant subgroup. Examining heterogeneous treatment effects (i.e., determining whether effects within subgroups differ from each other) requires an even larger sample to be adequately powered.9
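The sample size penalty for comparing subgroup effects follows from a short variance calculation, sketched below. The sketch assumes two subgroups with the same outcome variance and the same share assigned to treatment, and the function name and parameters are illustrative rather than drawn from any cited source.

```python
def interaction_sample_multiplier(relative_effect=1.0, subgroup_share=0.5):
    """Sample-size multiple needed to detect a difference between two
    subgroup effects with the same power as the main effect.

    relative_effect: size of the difference in effects divided by the size
        of the main effect.
    subgroup_share: fraction of the sample in the first subgroup.
    """
    # Var(main effect) is proportional to 1/N. Each subgroup estimate uses
    # only its share of the sample, so
    # Var(difference) is proportional to 1/(s*N) + 1/((1-s)*N) = 1/(s*(1-s)*N).
    variance_ratio = 1 / (subgroup_share * (1 - subgroup_share))
    # Required N scales with variance and with the inverse squared effect size.
    return variance_ratio / relative_effect ** 2


print(interaction_sample_multiplier(1.0))  # difference as large as the main effect
print(interaction_sample_multiplier(0.5))  # difference half the size of the main effect
```

With two equal-sized subgroups, detecting a difference as large as the main effect takes four times the sample, and detecting a difference half that size takes sixteen times the sample. Unequal subgroups make the penalty larger still.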

Stratify to improve power

Stratifying on variables that are strong predictors of the outcome can improve power by guaranteeing that these variables are balanced between treatment and control groups. Stratifying by subgroups may improve power for subgroup analyses. However, stratifying on variables that are not highly correlated with the outcome may reduce statistical power by reducing the degrees of freedom in the analysis.10
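The gain from stratifying on a strong predictor can be seen in a small simulation. The sketch below is a simplified illustration, not a reproduction of any study: it assumes a binary baseline covariate that strongly predicts the outcome, no true treatment effect, a simple difference-in-means estimator without stratum fixed effects, and arbitrary values for the sample size, number of repetitions, and seed.

```python
import random
from statistics import mean, pvariance


def estimator_variance(stratified, n=40, reps=2000, beta=5.0, seed=0):
    """Variance of the difference-in-means estimate across simulated trials
    in which the true treatment effect is zero."""
    rng = random.Random(seed)
    x = [i % 2 for i in range(n)]  # binary predictor, half ones and half zeros
    estimates = []
    for _ in range(reps):
        # Outcome depends strongly on x (through beta) plus noise.
        y = [beta * xi + rng.gauss(0, 1) for xi in x]
        if stratified:
            # Assign exactly half of each stratum to treatment.
            treated = set()
            for level in (0, 1):
                stratum = [i for i in range(n) if x[i] == level]
                treated.update(rng.sample(stratum, len(stratum) // 2))
        else:
            # Assign half of the full sample to treatment, ignoring x.
            treated = set(rng.sample(range(n), n // 2))
        treated_mean = mean(y[i] for i in treated)
        control_mean = mean(y[i] for i in range(n) if i not in treated)
        estimates.append(treated_mean - control_mean)
    return pvariance(estimates)


print("simple randomization:    ", round(estimator_variance(stratified=False), 3))
print("stratified randomization:", round(estimator_variance(stratified=True), 3))
```

Under simple randomization, chance imbalance in the predictor adds noise to the estimate; stratifying removes that source of variation. Setting `beta=0.0` in this sketch makes the two designs perform about the same, which illustrates why stratifying on weak predictors offers little benefit.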

Ground subgroups in theory

Choose subgroups based on the theory of change of the program being evaluated. These might be groups where you expect to find different (e.g., larger or smaller) effects or where results are particularly important. Prespecifying and limiting the number of subgroups that will be considered can guard against concerns about specification searching (p-hacking) and force researchers to consider only the subgroups that are theoretically driven. It is also helpful to prespecify any adjustments for multiple hypothesis testing.

When examining differential effects among subgroups is of interest, but it is not possible to pre-specify relevant groups, machine learning techniques may allow researchers to flexibly identify data-driven subgroups, but this also requires researchers to impute substantive meaning for the subgroups, which may not always be apparent.11

- In OHIE, researchers prespecified subgroups for which effects on clinical outcomes might have been stronger: older individuals, those with an existing diagnosis of hypertension, high cholesterol, or diabetes, and those with a heart attack or congestive heart failure. Even by doing this, the researchers found no significant improvements in these particular dimensions of physical health over this time period.

- In an Evaluation of Voluntary Counseling and Testing (VCT) in Malawi that explored the effect of a home based HIV testing and counseling intervention on risky sexual behaviors and schooling investments, researchers identified several relevant subgroups for which effects might differ: HIV-positive status, HIV-negative status, HIV-positive status with no prior belief of HIV infection, and HIV-negative status with prior belief of HIV infection. Though the program had no overall effect on risky sexual behaviors or test scores, there were significant effects within groups where test results corrected prior beliefs. Those who had an HIV-positive status but did not have a prior belief of HIV infection engaged in more dangerous sexual behaviors, and those who were surprised by a negative test experienced a significant improvement in achievement test scores (Baird et al 2014).

- An evaluation that used discounts and counseling strategies to incentivize the use of long-term contraceptives in Cameroon used causal forests to identify subgroups that were more likely to be persuaded by price discounts. Causal forests are a machine learning technique to identify an optimal strategy for splitting a sample into groups. Using this approach, the researchers found that clients strongly affected by discount prices are younger, more likely to be students, and more highly educated. Given this subgroup analysis, the researchers found that discounts increased the use of contraceptives by 50%, with larger effects for adolescents (Athey et al, 2021). Researchers prespecified this approach without having to identify the actual subgroups in advance.

Acknowledgments: Thanks to Amy Finkelstein, Berk Özler, Jacob Goldin, James Greiner, Joseph J Doyle, Katherine Baicker, Maggie McConnell, Marcella Alsan, Rebecca Dizon-Ross, Zirui Song and all the researchers included in this resource for their thoughtful contributions. Thanks to Jesse Gubb and Laura Ruiz-Gaona for their insightful edits and guidance, as well as to Amanda Buechele who copy-edited this document. Creation of this resource was supported by the National Institute On Aging of the National Institutes of Health under Award Number P30AG064190. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

1 For more information on statistical power and how to perform power calculations, see the Power Calculations resource; for additional technical background and sample code, see the Quick Guide to Power Calculations; for practical tips on conducting power calculations at the start of a project and additional intuition behind the components of a well-powered study, see Six Rules of Thumb for Determining Sample Size and Statistical Power.

2 The practice of designating a primary outcome is common in health care research and is described in the checklist for publishing in medical journals.

3 The challenge of differential coverage in administrative data is discussed at length in the resource on using administrative data for randomized evaluations.

4 The exception would be events, like births or deaths, that are guaranteed to appear in administrative data unaffected by post-treatment selection bias. In an evaluation of the Nurse Family Partnership in South Carolina researchers were able to measure adverse birth outcomes like preterm birth and low birth weight from vital statistics records.

5 Program take up has an outsize effect on power. A study with 50% take up requires four times the sample size to be equally powered as one with 100% take up, because sample size is inversely proportional to the square of take up. See Power Calculations 101: Dealing with Incomplete Take-up (McKenzie 2011) for a more complete illustration of the effect of the first stage of an intervention on power.

6 Health insurance that the government provides – either for free or at a very low cost – to qualifying low-income individuals.

7 In the OHIE, 5 blood spots were collected and then dried for analysis.

8 More information about how to increase response rates in mail surveys can be found in the resource on increasing response rates of mail surveys and mailings.

9 More on the algebraic explanation can be found in the following post “You need 16 times the sample size to estimate an interaction than to estimate a main effect”.

10 One would typically include stratum fixed effects in analysis of stratified data. The potential loss of power would be of greater concern in small samples where the number of parameters is large relative to the sample size.

11 More on using machine learning techniques for pre-specifying subgroups in the following blog post: “What’s new in the analysis of heterogeneous treatment effects?”

- What is the risk of an underpowered randomized evaluation?

- Power Calculations

- Quick guide to power calculations

- Six Rules of Thumb for Determining Sample Size and Statistical power

- Increasing response rates of mail surveys and mailings

- Real-World Challenges to Randomization and their Solutions

- Pre-Analysis Plans

Evaluation Summaries

- Healthcare Hotspotting in the United States

- Randomized Evaluation of the Nurse Family Partnership in South Carolina

- Reducing Nurse Burnout through Online Social Support

- Workplace Wellness Programs to Improve Employee Health Behaviors in the United States

- The Oregon Health Insurance Experiment in the United States

- Voluntary Counseling and Testing (VCT) to Reduce Risky Sexual Behaviors and Increase Schooling Investments in Malawi

- Prescribing Food as Medicine among Individuals Experiencing Diabetes and Food Insecurity in the United States

- Matching Provider Race to Increase Take-up of Preventive Health Services among Black Men in the United States

Research Papers

- Athey, Susan, Katy Bergstrom, Vitor Hadad, Julian Jamison, Berk Özler, Luca Parisotto, and Julius Dohbit Sama. “Shared Decision-Making: Can Improved Counseling Increase Willingness to Pay for Modern Contraceptives?” World Bank Document, September 2021.

- Baicker, Katherine, Sarah L. Taubman, Heidi L. Allen, Mira Bernstein, Jonathan H. Gruber, Joseph P. Newhouse, Eric C. Schneider, Bill J. Wright, Alan M. Zaslavsky, and Amy N. Finkelstein. “The Oregon Experiment — Effects of Medicaid on Clinical Outcomes.” New England Journal of Medicine 368, no. 18 (2013): 1713–22.

- Baicker, Katherine et al. “Supplementary Appendix —The Oregon Experiment: Effects of Medicaid on Clinical Outcomes.” New England Journal of Medicine 368, no. 18 (2013).

- Baird, Sarah, Erick Gong, Craig McIntosh, and Berk Özler. “The heterogeneous effects of HIV testing”. Journal of Health Economics (2014).

- Brookes, ST et al. “Subgroup Analyses in Randomised Controlled Trials: Quantifying the Risks of False-Positives and False-Negatives.” Health technology assessment (Winchester, England). U.S. National Library of Medicine.

- Finkelstein, Amy, Annetta Zhou, Sarah Taubman, and Joseph Doyle. “Health Care Hotspotting — a Randomized, Controlled Trial.” New England Journal of Medicine 382, no. 2 (2020): 152–62.

- Goldin, Jacob, Ithai Lurie, and Janet McCubbin. “Health Insurance and Mortality: Experimental Evidence from Taxpayer Outreach.” NBER, December 9, 2019.

- Song, Zirui and Katherine Baicker. “Effect of a Workplace Wellness Program on Employee Health and Economic Outcomes: A Randomized Clinical Trial”. National Library of Medicine, JAMA, April, 2019.

- Song, Zirui and Katherine Baicker. “Health And Economic Outcomes Up To Three Years After A Workplace Wellness Program: A Randomized Controlled Trial”. National Library of Medicine, JAMA, June, 2021.

- Greiner, James, Cassandra Wolos Pattanayak and Jonathan Hennessy. “How Effective Are Limited Legal Assistance Programs? A Randomized Experiment in a Massachusetts Housing Court”. SSRN, March, 2012.

Others

- “Health Expenditures by State of Residence, 1991-2020.” Centers for Medicare & Medicaid Services. Accessed February 24, 2023.

- Camden Coalition

- Nurse Family Partnership

- Gelman, Andrew. “Statistical Modeling, Causal Inference, and Social Science.” Statistical Modeling Causal Inference and Social Science, March 15, 2018.

- Bulger, John, and Joseph Doyle. “Fresh Food Farmacy: A Randomized Controlled Trial - Full Text View.” ClinicalTrials.gov, August 12, 2022.

- Kondylis, Florence, and John Loeser. “Back-of-the-Envelope Power Calcs.” World Bank Blogs, Jan 29, 2020. Accessed March 2, 2023.

- Özler, Berk. “What's New in the Analysis of Heterogeneous Treatment Effects?” World Bank Blogs, May 16, 2022.

For more information on statistical power and how to perform power calculations, see the Power Calculations resource; for additional technical background and sample code, see the Quick Guide to Power Calculations; for practical tips on conducting power calculations at the start of a project and additional intuition behind the components of a well-powered study, see Six Rules of Thumb for Determining Sample Size and Statistical Power.

Acquiring and using administrative data in US health care research

Summary

This resource provides guidance on acquiring and working with administrative data for researchers working in US health care contexts, with a particular focus on how the Health Insurance Portability and Accountability Act (HIPAA) structures data access. It illustrates the concepts with examples drawn from J-PAL North America’s experience doing research in this area. This resource assumes some knowledge of administrative data, IRBs, and the Common Rule. Readers seeking a comprehensive overview of how to obtain and use nonpublic administrative data for randomized evaluations across multiple contexts should consult the resource on using administrative data for randomized evaluations.

Disclaimer: This document is intended for informational purposes only. Any information related to the law contained herein is intended to convey a general understanding and not to provide specific legal advice. Use of this information does not create an attorney-client relationship between you and MIT. Any information provided in this document should not be used as a substitute for competent legal advice from a licensed professional attorney applied to your circumstances.

Introduction

There are a number of advantages to using administrative data for research, including lower cost, reduced participant burden and logistical burden for researchers, near-universal coverage and long-term availability, accuracy, and potentially reduced bias. For health research in particular, administrative data contains precise records of health care utilization, procedures, and their associated cost, which would be difficult or impossible to learn from surveying participants directly.1

Despite these advantages, a key challenge for research in health care contexts in the United States is acquiring administrative data when researchers are outside the institution that generated the data. Much health care data is Protected Health Information (PHI) governed by the Health Insurance Portability and Accountability Act (HIPAA), which makes it especially sensitive and challenging for researchers to acquire. This can lead to lengthy negotiations and confusion about the appropriate level of protection for transferring and using the data.

This resource covers:

- The relationships between HIPAA, human subjects research regulations, and researchers

- Levels of data defined by HIPAA

- Important considerations for making a health care data request

- Compliance with IRBs and DUAs

“An example of the value of administrative data over survey data can be seen in the Oregon Health Insurance Experiment’s study of the impact of covering uninsured low-income adults with Medicaid on emergency room use. This randomized evaluation found no statistically significant impact on emergency room use when measured in survey data, but a statistically significant 40 percent increase in emergency room use in administrative data (Taubman, Allen, Wright, Baicker, & Finkelstein 2014). Part of this difference was due to greater accuracy in the administrative data than the survey reports; limiting to the same time periods and the same set of individuals, estimated effects were larger in the administrative data and more precise” (Finkelstein & Taubman, 2015).

What is HIPAA and how does it affect researchers?

In the United States, the Privacy Rule of the Health Insurance Portability and Accountability Act (HIPAA) regulates the sharing of health-related data generated or held by health care entities such as hospitals and insurance providers. HIPAA imposes strict data protection requirements on the health care entities that it regulates (known as covered entities), with strict penalties and liability for non-compliance. These regulations allow, but do not require, sharing data for research. They augment the more general research protections codified in the Common Rule (the US federal policy for the protection of human subjects that outlines the criteria and mechanisms for IRB review), sometimes in overlapping and confusing ways.

In most cases, researchers are not covered entities; however, they must understand HIPAA requirements in order to interact with data providers who are bound by them. Many of the obligations to protect data under HIPAA will be passed to the researcher and their institution through data use agreements (DUAs) executed with covered entities.2 HIPAA restricts what covered entities may disclose for research and how they may disclose it. HIPAA also introduces distinct categories of data for health care research that researchers should understand, as they dictate much of the compliance environment a particular research project will fall under. In addition, researchers must understand how HIPAA requirements interact with requirements from the Common Rule. Note that HIPAA only applies in the United States and only to health care data; other topic areas and countries may have their own compliance standards.3

What kind of data does HIPAA cover?

The HIPAA Privacy Rule is a US federal law that covers disclosure of Protected Health Information (PHI) generated, received, or maintained by covered entities and their business associates. Covered entities are health care providers, health plans, and health care clearinghouses (data processors). Business associates include organizations that perform functions on behalf of a covered entity that may involve protected health information. In the research context, researchers may interact with business associates who work as data processors, data warehouses, or external analytics or data science teams. Data from covered entities used in research may include “claims” data from billing or insurance records, hospital discharge data, data from electronic medical records like diagnoses and doctors’ notes, or vital statistics like dates of birth.

Protected health information (PHI) refers to identifiable health data maintained or received by a covered entity or its business associates. More specifically, the HIPAA Privacy Rule defines 18 identifiers and 3 levels of data — research identifiable data, also known as research identifiable files (RIF), limited data sets (LDS) and de-identified data — each with different requirements depending on their inclusion of these identifiers, detailed in Table 1 below. Data without any of the 18 identifiers is considered de-identified, does not contain PHI, and is therefore not restricted by HIPAA. The inclusion of any identifiers means that the data is subject to at least some restrictions. Notably, between fully identified and de-identified data, HIPAA defines a middle category — limited data — that contains some potentially-identifiable information and is subject to some but not all restrictions. As a result, the extent to which a data request must comply with HIPAA depends on the level of protected health information contained in the data to be disclosed.
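The relationship between identifiers and data levels described above can be sketched in a few lines of Python. This is a simplified illustration, not a compliance determination: the `ColumnKind` labels, the `data_level` function, and the example column names are all hypothetical, and it assumes someone has already reviewed each column against the HIPAA identifier list and tagged it by hand.

```python
from enum import Enum


class ColumnKind(Enum):
    """Hypothetical tags a reviewer assigns to each column in a data request."""
    DIRECT_IDENTIFIER = "direct_identifier"  # e.g. name, street address, record number
    DATE_OR_GEOGRAPHY = "date_or_geography"  # dates or sub-state geography allowed in an LDS
    NOT_AN_IDENTIFIER = "not_an_identifier"  # none of the HIPAA identifiers


def data_level(column_kinds):
    """Return the data level implied by the most sensitive column present.

    column_kinds maps column name -> ColumnKind (assumed to be tagged manually).
    """
    kinds = set(column_kinds.values())
    if ColumnKind.DIRECT_IDENTIFIER in kinds:
        return "research identifiable"
    if ColumnKind.DATE_OR_GEOGRAPHY in kinds:
        return "limited data set"
    return "de-identified"


# Illustrative request: admission dates and ZIP codes, but no direct identifiers.
example_request = {
    "admission_date": ColumnKind.DATE_OR_GEOGRAPHY,
    "zip_code": ColumnKind.DATE_OR_GEOGRAPHY,
    "total_charges": ColumnKind.NOT_AN_IDENTIFIER,
}
print(data_level(example_request))  # limited data set
```

The point of the sketch is that a single column can change the level of the whole data set, so it is worth reviewing a request variable by variable before negotiating with the data provider.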

Not all health data is protected health information (PHI) governed by HIPAA. Health information gathered by researchers themselves during an evaluation is not PHI, though it would be personally identifiable information subject to human subject protections under the Common Rule.4 Not all secondary data providers are necessarily covered entities either. Student health records from a university-affiliated medical center, for example, may be governed by the education privacy law FERPA rather than HIPAA, because the HIPAA Privacy Rule excludes education records covered by FERPA from its definition of PHI. Researchers should confirm whether their implementing partner or data provider is a covered entity, as some organizations will incorrectly assume they are subject to HIPAA, invoking unnecessary review and regulation. For guidance on determining whether an organization is subject to the HIPAA Privacy Rule, the US Department of Health & Human Services (HHS) defines a covered entity and the Centers for Medicare & Medicaid Services (CMS) provides a flowchart tool.

Levels of data

Research identifiable data

Research identifiable data contain sufficient identifying information such that the data may be directly matched to a specific individual. Under HIPAA, this means data containing any identifier (such as name, address, or record numbers) other than the dates and locations allowed in limited data sets (LDS). A list of variables that make a dataset identifiable under the HIPAA Privacy Rule can be found in Table 1. Identifiable data may only be shared for research purposes with individual authorization from each patient or a waiver of authorization approved by an institutional review board (IRB) or privacy board (a process similar to informed consent or waivers of consent as required by the Common Rule). This level of data is typically not necessary to conduct analysis for impact evaluations if a data provider or third party is able to perform data linkages on the researcher’s behalf. Researchers may need to acquire identified data to perform data linkages themselves or because the data provider is not able or willing to remove identifiers prior to sharing the data.

Researchers receiving research identifiable files must take pains to protect data with strong data security measures and by removing identifiers from analysis data and storing them separately once they are no longer needed (a J-PAL minimum must do). Researchers should expect extensive negotiation and review of their data request, and will need to obtain IRB approval and execute a DUA before receiving data. Protecting participant data confidentiality is a vital component of conducting ethical research.
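As a rough illustration of removing and separately storing identifiers, the Python sketch below splits each record into a crosswalk entry (identifiers plus a random study ID) and an analysis record (study ID plus everything else). The `split_identifiers` helper, the `study_id` key, and the field names are hypothetical, and the sketch does not cover the encryption, access controls, or storage arrangements that an actual data security plan or DUA would require.

```python
import secrets


def split_identifiers(records, identifier_fields):
    """Split records into (crosswalk, analysis) lists linked by a random study ID.

    records: list of dicts, one per individual (assumed layout).
    identifier_fields: names of the fields to strip from the analysis data.
    """
    identifier_fields = list(identifier_fields)
    crosswalk, analysis = [], []
    for record in records:
        # Random ID that carries no information about the person.
        study_id = secrets.token_hex(8)
        id_part = {field: record[field] for field in identifier_fields}
        rest = {k: v for k, v in record.items() if k not in identifier_fields}
        crosswalk.append({"study_id": study_id, **id_part})
        analysis.append({"study_id": study_id, **rest})
    return crosswalk, analysis


# Illustrative input with made-up values.
raw = [
    {"name": "Example Person A", "record_number": "0001", "er_visits": 3},
    {"name": "Example Person B", "record_number": "0002", "er_visits": 0},
]
crosswalk, analysis = split_identifiers(raw, ["name", "record_number"])
# The crosswalk would be stored separately from, and more securely than,
# the analysis file, which now contains only the study ID and outcomes.
```

Using a random ID rather than one derived from the identifiers means the analysis file cannot be re-linked to individuals without access to the crosswalk.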

Limited data sets

The HIPAA Privacy Rule defines limited data sets as those that contain geographic identifiers smaller than a state (but less exact than street address) or dates related to an individual (including dates of medical service and birthdates for those younger than 90) but do not otherwise contain HIPAA identifiers. For research purposes, this geographic and time information (such as hospital admission and discharge dates) can be particularly useful for analysis. Limited data sets are therefore a valuable class of administrative health data that balances ease of access with ease of use.

Limited data sets may be shared by a covered entity with a data use agreement (DUA).5 HIPAA does not require individual authorization or a waiver of authorization. Researchers should always seek IRB approval for research involving human subjects or their data, but it is possible that research using only a limited data set may be determined exempt or not human subjects research by an IRB if there is no other involvement of human subjects. Using a limited data set for randomized evaluations typically means that another party besides the researcher, such as the data provider or an intermediary, must link data to evaluation subjects.