Evaluation

Researchers conducted a randomized evaluation to test the impact of lottery-based financial awards given to young individuals who tested STI-negative on their likelihood of contracting HIV and engaging in risky sexual behavior. The intervention provided frequent rewards at short intervals to bring the benefits of safe sex closer to the present and used a lottery design to try to target higher-risk individuals. Lottery-based financial incentives reduced the prevalence and incidence of HIV by 12 percent and 21 percent respectively, and their impact was largest among individuals with a high tolerance for risk.

Evaluation

In Uganda, researchers evaluated whether offering financial education or group savings accounts to church-based youth groups increased savings. One year after the intervention ended, they found that total savings and income had increased among youth who were offered financial education, group savings accounts, or both education and group accounts.

Evaluation

Researchers conducted a randomized evaluation to test the impact of targeting cash transfers to women on household spending and women’s empowerment. Targeting the cash transfer to mothers increased household spending on food. Women offered the transfers also scored higher on measures of empowerment, reflecting greater control over household resources.

Evaluation

This study evaluated the impact of a preschool nutrition and health project that targeted anemia in the slums of Delhi, India, on child health and school attendance. Results showed that the program increased weight gain and school attendance, particularly for groups with high baseline rates of anemia.

Evaluation

Researchers assessed the impact of a recreational tutoring program provided by the NGO Apfee on students’ academic achievement and interest in schoolwork. In schools where the tutoring program was offered, children whom teachers had identified as falling behind did not improve their reading or math skills, although they developed a taste for reading and academic subjects. These results hold two years after the implementation of the program.

Evaluation

Researchers tested a fee-for-service scheme, a type of performance pay, at health facilities in the Democratic Republic of Congo to evaluate its impact on health service utilization. While fee-for-service facilities invested more effort in attracting patients, this increase did not translate into higher levels of service utilization or better health outcomes. Additionally, health workers in fee-for-service facilities became less intrinsically motivated and less satisfied with their jobs compared to their counterparts in fixed payment facilities.

Evaluation

Researchers measured the impact of different interest rates on loan take-up, loan amounts, and repayment rates among female clients of Compartamos Banco in Mexico. Lower interest rates led to an increase in the number of loans issued, loan amounts, and new borrowers but did not increase profits in the short term.

Evaluation

Researchers partnered with Partners in Health (PIH) to conduct a randomized evaluation to test the impact of monthly coupons and different community health worker (CHW) delivery methods on chlorine usage and child health outcomes in Southern Malawi. They found that the coupon program had stronger impacts on both outcomes and was more cost-effective than having CHWs distribute free chlorine to households during routine monthly visits.

Evaluation

In Rwanda, researchers conducted a randomized evaluation to compare the impact of Huguka Dukore, a youth employment and training program, to that of cash grants of an equivalent value on several economic outcomes. Huguka Dukore improved hours worked, assets, savings, and subjective well-being, while cost-equivalent cash transfers increased all these outcomes as well as consumption, income, and wealth.

Evaluation

Researchers evaluated the impacts of a $68 million infrastructure investment program in Mexico on urban residents’ access to infrastructure, property values, private investment, moving rates, and community cohesion. The study found that access to infrastructure and property values improved in neighborhoods participating in the program. Private investment in these neighborhoods also increased, moving rates decreased, and the data suggest that safety improved as a result of the program.

Evaluation

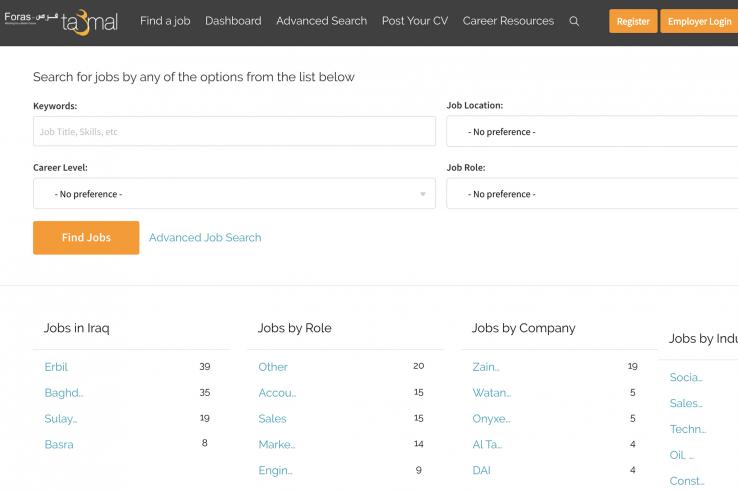

Researchers evaluated whether providing objective information helps jobseekers target better-suited jobs.

Evaluation

Researchers are partnering with a leading mobile network operator to investigate how the internet affects financial and economic outcomes, particularly for women.

Evaluation

Researchers evaluated the impact of offering either subsidized vocational training to unemployed youth or subsidized apprenticeships for firms on youth employment and earnings in urban Uganda. Both forms of subsidized training led to greater skill accumulation, higher employment rates, and higher earnings for workers; however, the gains were larger for vocational trainees and were sustained over time.

Evaluation

Researchers worked with existing savings clubs in Kenya to study the effect of two interventions on savings: the provision of communal crop storage devices and the provision of savings accounts earmarked for farm purchases. Researchers found that the products were popular: about 56% of farmers took up the products. Respondents in the maize storage intervention were 23 percentage points more likely to store maize (on a base of 69%), 37 percentage points more likely to sell maize (on a base of 36%), and (conditional on selling) sold later and at higher prices.

Evaluation

In Zambia, researchers examined the impact of access to seasonal credit on farming households’ consumption, labor allocation, and agricultural output. The results suggest that access to food and cash loans during the lean season increased agricultural output and consumption, decreased off-farm labor, and increased local wages.